Allow upgrades across versions #4980

Comments

|

@djbrooke I like the idea of releasing more often to get fixes and features out to the community! I think there are a couple of ways of approaching the situation, and the desired solution may actually be a combination of what I'm thinking. As you mentioned, if there are more frequent releases, sysadmins should (ideally) be able to skip ahead versions. The more I think about it, the more I feel this is a requirement rather than a nice-to-have. The reason is so that sysadmins aren't overburdened with upgrading to every intermediate patch or minor release to get to the latest one they're actually interested in or may need. Basically, if the requirement is to upgrade to every intermediate version and they're a bit behind with releases, it (potentially) discourages sysadmins from prioritizing upgrades in their workload, causing more pain down the line when the upgrade finally needs to be done and making the faster release schedule somewhat moot. That being said, I don't know the level of effort required to implement this for the project... There's also the consideration of being able to revert to the previously deployed version if needed. Also, food for thought: what if the project leveraged pre-releases to get those bug fixes/features out quickly? Basically you could have a pre-release 4.9.2-0.x.x (following semver), just bump that, and keep your current release schedule. Thoughts? |
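To illustrate the pre-release idea, here is a minimal sketch of Semantic Versioning precedence, in which a pre-release such as 4.9.2-0.1.0 sorts before the final 4.9.2. The function names (`parse`, `compare`) and the concrete version strings are made up for illustration, and build metadata (`+...`) is not handled.

```python
from functools import cmp_to_key


def parse(version):
    """Split 'MAJOR.MINOR.PATCH[-PRERELEASE]' into (core tuple, pre-release list)."""
    core, _, pre = version.partition("-")
    major, minor, patch = (int(part) for part in core.split("."))
    return (major, minor, patch), (pre.split(".") if pre else [])


def _cmp_identifier(a, b):
    # Numeric identifiers compare as integers and rank below alphanumeric ones.
    if a.isdigit() and b.isdigit():
        return (int(a) > int(b)) - (int(a) < int(b))
    if a.isdigit():
        return -1
    if b.isdigit():
        return 1
    return (a > b) - (a < b)


def compare(v1, v2):
    """Return -1, 0 or 1 according to semver precedence of v1 versus v2."""
    core1, pre1 = parse(v1)
    core2, pre2 = parse(v2)
    if core1 != core2:
        return (core1 > core2) - (core1 < core2)
    # Same core version: the one without a pre-release tag ranks higher.
    if not pre1 or not pre2:
        return bool(pre2) - bool(pre1)
    for a, b in zip(pre1, pre2):
        result = _cmp_identifier(a, b)
        if result:
            return result
    # All shared identifiers equal: the longer pre-release ranks higher.
    return (len(pre1) > len(pre2)) - (len(pre1) < len(pre2))


# Illustrative version strings only.
versions = ["4.9.2", "4.9.2-0.2.0", "4.9.1", "4.9.2-0.1.0"]
ordered = sorted(versions, key=cmp_to_key(compare))
print(ordered)  # ['4.9.1', '4.9.2-0.1.0', '4.9.2-0.2.0', '4.9.2']
```

Under this ordering, sites that want early fixes could track the pre-release line, while sites that prefer "known-good" versions would only see the final release.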

|

I'll second Andrew's plug for Semantic Versioning but I personally don't find stepping through each release prohibitive as I love my archivists and our archive. I think I can safely speak for Odum in saying that we wait for Harvard to upgrade, and for Thu-Mai and Mandy to have used any new version heavily there, before we consider upgrading. We love "known-good" releases (such as 4.7.1 and 4.8.6, or if you've ever administered a NetApp, 7.0.4, wistfully referred to by their field engineers). So I think I too am lobbying for fewer production releases with heavier pre-release testing. |

|

I think it makes sense to support skipping incremental versions during upgrade (potentially only minor versions). If possible, one other factor that could encourage more frequent upgrading would be to support a "mayday" fall-back to a previous "known-good" version (without requiring a database/index restore). There are a number of articles discussing blue/green deployments that might be at least partly applicable here. |

|

We met at tech hours today and decided as a first step (and the scope of this issue) that we will extract the db scripts that get created when you deploy, then write a new script that takes in two parameters, startVersion and endVersion, and it will generate a script that will do all the db changes required, including:

We may optionally include the following in the script, if straightforward:

This seems like a good first step, as most of the challenges of upgrading across versions stem from db-related changes. After we have this, we can open other issues for other needed solutions. |
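The proposed script could look roughly like the following sketch. It assumes an ordered list of releases and one SQL upgrade script per step; the release list, the file naming pattern and the function names are all hypothetical and not taken from the Dataverse repository.

```python
# Hypothetical ordered release list, for illustration only.
RELEASES = ["4.8.4", "4.8.5", "4.8.6", "4.9", "4.9.1", "4.9.2", "4.9.3"]


def upgrade_steps(start_version, end_version, releases=RELEASES):
    """Return the (old, new) pairs to walk through, in order."""
    start = releases.index(start_version)  # raises ValueError if unknown
    end = releases.index(end_version)
    if start >= end:
        raise ValueError("endVersion must be later than startVersion")
    return [(releases[i], releases[i + 1]) for i in range(start, end)]


def build_script(start_version, end_version, read_script):
    """Concatenate the per-step SQL into one script, preserving order.

    read_script is any callable that maps a script name to its SQL text.
    """
    parts = []
    for old, new in upgrade_steps(start_version, end_version):
        name = f"upgrade_v{old}_to_v{new}.sql"  # assumed naming pattern
        parts.append(f"-- {name}\n{read_script(name)}")
    return "\n\n".join(parts)


# Usage with a stand-in reader instead of real files.
steps = upgrade_steps("4.8.6", "4.9.1")
script = build_script("4.8.6", "4.9.1", lambda name: "SELECT 1;")
print(steps)
print(script)
```

Keeping the steps in release order matters because a later script may modify objects created by an earlier one.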

|

Here are the raw notes from our tech hours discussion on this issue. Gustavo's summary has come out of this discussion:

|

|

FWIW: Given that the normal instructions have you delete the generated dir, I'm not sure that the actual deployment of the intermediate wars does anything. For TDL, I've been successful in basically applying the non-war instructions for each version sequentially and then deploying the final war. I did that for 4.8.4-4.8.6 and then for 4.8.6-4.9.3 (just on the dev/test machine so far). Doing things sequentially is critical: later scripts sometimes update things created in prior patches. And there are cases where doing all the steps is not necessary but harmless. For example, the 4.8.5-6 upgrade script modifies a table that was only created if you deployed 4.8.5, so the sql gives warnings, but the 4.8.6 war creates the table correctly to start (presumably because the scripts in /generated get updated). Similarly, it appears that the instruction to sync the preprocess.R file between TwoRavens and Dataverse is no longer needed/valid in 4.9.3, so syncing them before deploying the 4.9.3 war works (because 4.9.3 writes its new version), but the sync itself isn't needed. There are also things like the 4.9 note to generate DOIs for existing published files that, if one is going straight to 4.9.3, should not be done at all. (So far, this seems like an outlier, but for the general case, we should watch for things where sequential upgrades would NOT work.) |

|

@qqmyers good examples. Thanks for your input. You seem to grok the situation quite well. The SQL update scripts we've been writing for years never have "add table" statements in them. We figure the war file will add the tables (with warnings, unfortunately, which is what #4920 is about). So one of the main deliverables of this issue is capturing the "createDDL" file mentioned above as part of our release process. This will probably benefit #4040 because within OpenShift the process of starting Glassfish just for the purpose of deploying the war file to create tables is time-consuming. With the createDDL file available in the future, we can hopefully speed this up because the postgres container could just use the createDDL directly without involving Glassfish /cc @danmcp @thaorell . This is the way @craig-willis did it as the first person to Dockerize Dataverse. See https://github.com/nds-org/ndslabs-dataverse/blob/4.7/dockerfiles/README.md#generating-ddl for details.

Oh and while I'm thinking of it, as I just mentioned at https://groups.google.com/d/msg/dataverse-community/Tyfz7d5xQ24/EN9OfGPlAQAJ , we currently say, "When upgrading within Dataverse 4.x, you will need to follow the upgrade instructions for each intermediate version" at http://guides.dataverse.org/en/4.9.3/installation/upgrading.html so we should update this text as part of the pull request for this issue. |

|

@poikilotherm yes, we are suffering from migration hell. 😄 I brought up Flyway at tech hours on Tuesday (and I was looking briefly again at the Liquibase website) but the idea was dismissed and I can't remember exactly why. Do you have experience with either of these tools or others? In the room there were people who have experience with Django's "migrations" feature ( https://docs.djangoproject.com/en/2.1/topics/migrations/ ) which apparently "just works". It would be nice to have something similar for Java and JPA (Java Persistence API). |

|

I have been working with Django migrations and have used MongoDB-related Java migration tooling a bit. I can't currently call myself an expert in this field, but I am aware that this kind of tooling is state of the art and industry-grade. I can only encourage every dev to read these notes by Scott Allen: https://odetocode.com/blogs/scott/archive/2008/01/30/three-rules-for-database-work.aspx Flyway and Liquibase seem to be the prominent big players in this field. Good 4-minute read: https://reflectoring.io/database-refactoring-flyway-vs-liquibase |

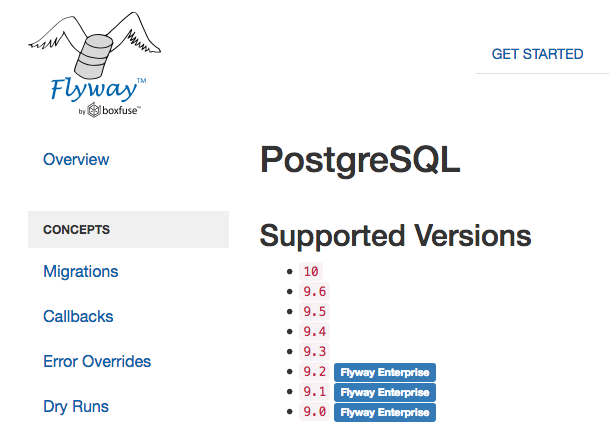

@pdurbin because I thought I remembered that the open source version of Flyway meant you were locked into whatever the most recently released postgres version was. |

|

@pameyer what do you mean by locked in? You are locked into Postgres 9.6 anyway right now, aren't you? If this dependency was changed to Postgres 10 or 11, this would mean an upgrade outside of Dataverse anyway (maybe using Flyway CLI) either via dump/restore or About Flyway + Postgres compatibility: https://flywaydb.org/documentation/database/postgresql |

|

Interesting. Here's a screenshot from https://flywaydb.org/documentation/database/postgresql (thanks for the link): When you click "download/pricing" at https://flywaydb.org/download they call this feature of Enterprise Edition "Older database versions compatibility". |

|

Not specifying how this is done, but in favor of easier upgrading to the most recent version without having to step through each individual release. |

… (and checking in the first scripts, for the 4.9* versions). (#4980)

|

I agree about using Flyway. I have used it before and it makes migration very easy: it basically keeps another db table that tracks which SQL scripts have already been executed. |
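The tracking-table idea described above can be sketched as follows. This is not Flyway's implementation or API, only a minimal illustration of the technique; it uses Python's built-in `sqlite3` in place of PostgreSQL, and the table name `schema_history`, the migration names and the SQL statements are invented for the example.

```python
import sqlite3

# Hypothetical migrations: (name, SQL). The order in the list is the apply order.
MIGRATIONS = [
    ("001_create_dataset", "CREATE TABLE dataset (id INTEGER PRIMARY KEY, title TEXT)"),
    ("002_add_doi", "ALTER TABLE dataset ADD COLUMN doi TEXT"),
]


def migrate(conn, migrations):
    """Apply every migration not yet recorded in the tracking table."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_history (name TEXT PRIMARY KEY)")
    applied = {row[0] for row in conn.execute("SELECT name FROM schema_history")}
    newly_applied = []
    for name, sql in migrations:
        if name in applied:
            continue  # already executed on this database, so skip it
        conn.execute(sql)
        conn.execute("INSERT INTO schema_history (name) VALUES (?)", (name,))
        newly_applied.append(name)
    conn.commit()
    return newly_applied


conn = sqlite3.connect(":memory:")
first_run = migrate(conn, MIGRATIONS)
second_run = migrate(conn, MIGRATIONS)
print(first_run)   # ['001_create_dataset', '002_add_doi']
print(second_run)  # []
```

Because the database itself records what has been applied, an installation that is several releases behind picks up every pending migration in order, without a separate manual step per version.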

|

I pulled this out of QA so we can discuss @MrK191's comment above about Flyway. In IRC he sounded willing to make a pull request: http://irclog.iq.harvard.edu/dataverse/2018-11-21#i_79702 Also, no information has yet been added to the "making releases" page. See #5317 (comment) |

|

This is close to being merged and we should not increase scope. Moving back to QA. @MrK191 - can you please create a separate issue about Flyway? |

|

Perfect, thanks |

|

This works, tested upgrade from v4.0 to v4.9.4 with existing data. Passing back to @landreev to update doc. |

…grade-across-versions script. (#4980)

|

I was still working on the extra documentation under "making releases", yes. (checked in now) |

|

@landreev I got Flyway working, so I can make a PR. The issue with scripting is that it complicates automatic deployment and adds an additional manual step for something that can be automated. |

I want to release as often as possible in order to get valuable features and bug fixes out to the community. There's some tension though, as more releases can create a burden for sysadmins in the community, as we recommend that upgraders step through each Dataverse release on their way to the most recent version. My thought is to allow easier upgrading to the most recent version, but any other thoughts or proposals are welcome, especially from the sysadmins in the community.

Tagging @scolapasta for a possible tech hours discussion.