Releases: oven-sh/bun

Canary (dbd320ccfa909053f95be9e1643d80d73286751f)

This release of Bun corresponds to the commit: c1708ea

Bun v0.1.4

To upgrade:

```sh
bun upgrade
```

If you have any problems upgrading, run this:

```sh
curl https://bun.sh/install | bash
```

Bug fixes

- Fixed a GC bug with `fetch(url)` that frequently caused crashes

- fix(env_loader): Ignore spaces before equals sign by @FinnRG in #602
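The env_loader fix above is about `.env` lines such as `FOO =bar`. Here is a rough illustration in TypeScript. It is a minimal sketch, not Bun's actual env_loader (which is written in Zig). A line parser that trims whitespace around the key treats `FOO =bar` and `FOO=bar` the same way. The `parseEnvLine` name and the quote-stripping rule are assumptions made for illustration.

```typescript
// Hypothetical helper: parse one KEY=VALUE line from a .env file.
// Not Bun's env_loader; a sketch of the "ignore spaces before =" behavior.
function parseEnvLine(line: string): [string, string] | null {
  const trimmed = line.trim();
  // Skip blank lines and comments
  if (trimmed === "" || trimmed.startsWith("#")) return null;

  const eq = trimmed.indexOf("=");
  if (eq <= 0) return null; // no "=" at all, or an empty key

  // Trimming the key is what makes "FOO =bar" parse like "FOO=bar"
  const key = trimmed.slice(0, eq).trim();
  let value = trimmed.slice(eq + 1).trim();

  // Assumption: strip one pair of matching surrounding quotes
  const first = value[0];
  if (value.length >= 2 && (first === '"' || first === "'") && value.endsWith(first)) {
    value = value.slice(1, -1);
  }
  return [key, value];
}
```

For example, `parseEnvLine('NAME = "bun"')` yields the pair `NAME` / `bun`.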

Misc:

- Create share image for social media by @gabrnunes in #629

- feat(landing): create github action rebuilding the site on changes by @pnxdxt in #651

- cleanup benchmarks folder by @evanwashere in #587

Typos, README, examples:

- Fixed broken links on the Bun.serve benchmark by @BE-CH in #590

- updated README.md by @rudrakshkarpe in #599

- chore(docs): add comma by @josesilveiraa in #580

- Added note of AVX2 requirement and mentioned Intel SDE workaround in README.md by @deijjji303 in #346

- builtin -> built-in by @boehs in #585

- docs(various): `.md` readability improvements by @ryanrussell in #597

- docs: remove double v of version by @pnxdxt in #601

- Updated logLevel to include `debug` in readme by @biw in #189

- refactor(exports.zig): Fix WebSocketHTTPSClient var name by @ryanrussell in #598

- fix: Support specifying a JSON response type in bun.d.ts by @rbargholz in #563

- hono example with typescript by @Jesse-Lucas1996 in #577

- fix `blank` template by @SheetJSDev in #523

- fix(examples/hono): Update package name by @FinnRG in #620

- chore(landing): build changes by @pnxdxt in #626

- Fix "coercion" spelling by @Bellisario in #628

- fix benchmark urls on landing page by @evanwashere in #636

- fix #638 by @adi611 in #639

- fix #640 by @adi611 in #641

New Contributors

- @BE-CH made their first contribution in #590

- @rudrakshkarpe made their first contribution in #599

- @josesilveiraa made their first contribution in #580

- @deijjji303 made their first contribution in #346

- @pnxdxt made their first contribution in #601

- @biw made their first contribution in #189

- @rbargholz made their first contribution in #563

- @Jesse-Lucas1996 made their first contribution in #577

- @SheetJSDev made their first contribution in #523

- @adi611 made their first contribution in #639

- @gabrnunes made their first contribution in #629

Full Changelog: bun-v0.1.3...bun-v0.1.4

bun v0.1.3

What's new:

- `alert()`, `confirm()`, `prompt()` are new globals, thanks to @sno2

- More comprehensive type definitions, thanks to @Snazzah

- Fixed `console.log()` sometimes adding an "n" to non-BigInt numbers, thanks to @FinnRG

- Fixed a `subarray()` `console.log` bug: TypedArrays now log like in Node instead of like a regular array

- Migrate to Zig v0.10.0 (HEAD) by @alexkuz in #491

- `console.log(request)` prints something instead of nothing

- Fixed `performance.now()` returning nanoseconds instead of milliseconds, thanks to @Pruxis

- Updated wordmark in bun-error, thanks to @Snazzah
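The `console.log()` fix above comes down to one rule: only BigInt values get the `n` suffix. This matches how Node prints `10n` versus `10`. Below is a minimal sketch of that rule using a hypothetical `formatNumeric` helper; it is not Bun's formatter. The `-0` case follows Node's `util.inspect`, which prints `-0`.

```typescript
// Hypothetical helper: format a number the way a console formatter should.
function formatNumeric(value: number | bigint): string {
  // Only real BigInts get the "n" suffix (10n), never plain numbers (10)
  if (typeof value === "bigint") return `${value}n`;
  // String(-0) is "0", so keep the sign explicitly, as Node does
  if (Object.is(value, -0)) return "-0";
  return String(value);
}
```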

All the PRs:

- fix: add unzip is required information by @MoritzLoewenstein in #305

- feat: new blank template by @xhyrom in #289

- fix(templates/react): Add a SVG type definition by @FinnRG in #427

- Further landing accessibility improvements by @Tropix126 in #330

- Fix react example README typo. by @keidarcy in #433

- style(napi): cleanup commented out code by @xhyrom in #435

- feat: update default favicon to new logo by @Snazzah in #477

- Add SVG logo and SEO improvements to landing by @Snazzah in #417

- Fix illegal instruction troubleshooting steps by @ziloka in #400

- Migrate to Zig v0.10.0 (HEAD) by @alexkuz in #491

- fix: Remove unnecessary n while formatting by @FinnRG in #508

- fix: Append n when printing a BigInt by @FinnRG in #509

- Fix grammar erros in README.md by @jakemcf22 in #476

- typo by @pvinis in #483

- README.md typo fix by @JL102 in #449

- fix dotenv config snippet by @JolteonYellow in #436

- add partial node:net polyfill by @evanwashere in #516

- fix: update build files to latest Zig version by @sno2 in #522

- Update Bun CLI version typo to v0.1.2 by @b0iq in #503

- update bash references to work in non-fhs compliant distros by @lucasew in #502

- bugfix: performance.now function should return MS instead of nano by @Pruxis in #428

- feat: add issue templates by @hisamafahri in #466

- refactor(websockets): Rename `connectedWebSocketContext()` by @ryanrussell in #459

- feat(bun-error): update "powered by" logo to use new bun wordmark by @Snazzah in #536

- Remove unnecessary `Output.flush`s before `Global.exit` and `Global.crash` by @r00ster91 in #535

- bun-framework-next README.md clarification by @Scout2012 in #534

- Fix "operations" word spelling by @Bellisario in #543

- Update GitHub URL to match new repo URL by @auroraisluna in #547

- fix: remove unnecessary quotes in commit message by @dkarter in #464

- chore: update issue templates by @xhyrom in #544

- Fix macOS build by @thislooksfun in #525

- add depd browser polyfill by @evanwashere in #517

- Updated typo in example by @rml1997 in #573

- Fix: NotSameFileSystem at clonefile by @adi-g15 in #546

- Cleanup discord-interactions readme by @CharlieS1103 in #451

- Fixes typo in src/c.zig by @gabuvns in #568

- feat(types): Add types for node modules and various fixing by @Snazzah in #470

- Revert "Fix: NotSameFileSystem at clonefile" by @Jarred-Sumner in #581

- feat(core): implement web interaction APIs by @sno2 in #528

New Contributors

- @MoritzLoewenstein made their first contribution in #305

- @xhyrom made their first contribution in #289

- @FinnRG made their first contribution in #427

- @Tropix126 made their first contribution in #330

- @keidarcy made their first contribution in #433

- @Snazzah made their first contribution in #477

- @ziloka made their first contribution in #400

- @jakemcf22 made their first contribution in #476

- @pvinis made their first contribution in #483

- @JL102 made their first contribution in #449

- @JolteonYellow made their first contribution in #436

- @sno2 made their first contribution in #522

- @b0iq made their first contribution in #503

- @lucasew made their first contribution in #502

- @Pruxis made their first contribution in #428

- @hisamafahri made their first contribution in #466

- @ryanrussell made their first contribution in #459

- @r00ster91 made their first contribution in #535

- @Scout2012 made their first contribution in #534

- @Bellisario made their first contribution in #543

- @auroraisluna made their first contribution in #547

- @dkarter made their first contribution in #464

- @thislooksfun made their first contribution in #525

- @rml1997 made their first contribution in #573

- @adi-g15 made their first contribution in #546

- @CharlieS1103 made their first contribution in #451

- @gabuvns made their first contribution in #568

Full Changelog: bun-v0.1.2...bun-v0.1.3

Bun v0.1.2

To upgrade:

```sh
bun upgrade
```

If you have any problems upgrading, run this:

```sh
curl https://bun.sh/install | bash
```

What's Changed

bun install:

- Fix `error: NotSameFileSystem`

- When the Linux kernel version doesn't support io_uring, print instructions for upgrading to the latest Linux kernel on Windows Subsystem for Linux
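As far as we can tell, `NotSameFileSystem` is the name Zig's standard library gives to the `EXDEV` errno. `link(2)` returns that error when the source and destination are on different filesystems. A common pattern for package installers is to try a hard link first and fall back to a plain copy on `EXDEV`. The sketch below shows that pattern. The `FsOps` interface and the `linkOrCopy` name are hypothetical, and `node:fs`'s `linkSync` and `copyFileSync` would satisfy the interface.

```typescript
// Hypothetical minimal filesystem interface (node:fs linkSync/copyFileSync fit it)
interface FsOps {
  link(src: string, dest: string): void;
  copyFile(src: string, dest: string): void;
}

// Try a hard link first (cheap: no file data is copied). link(2) fails with
// EXDEV when src and dest are on different filesystems; fall back to copying.
function linkOrCopy(src: string, dest: string, fs: FsOps): "linked" | "copied" {
  try {
    fs.link(src, dest);
    return "linked";
  } catch (err) {
    if ((err as { code?: string }).code === "EXDEV") {
      fs.copyFile(src, dest);
      return "copied";
    }
    // Any other failure (permissions, missing file, ...) is a real error
    throw err;
  }
}
```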

bun.js:

- Export `randomUUID` in `crypto` module by @WebReflection in https://github.com/Jarred-Sumner/bun/pull/254

- `napi_get_version` should return the Node-API version by @kjvalencik in https://github.com/Jarred-Sumner/bun/pull/392

bun dev:

- Fix crash on running `bun dev` on linux on some machines (@egoarka) https://github.com/Jarred-Sumner/bun/pull/316

Examples:

- Minor updates to the Next.js example app by @Nutlope in https://github.com/Jarred-Sumner/bun/pull/389

- Fixing issues with example app READMEs by @Nutlope in https://github.com/Jarred-Sumner/bun/pull/397

- Add RSC to list of unsupported Next.js features in README by @Nutlope in https://github.com/Jarred-Sumner/bun/pull/334

- adding a template .gitignore by @MrBaggieBug in https://github.com/Jarred-Sumner/bun/pull/232

Landing page:

- Fix: long numbers + unused css by @michellbrito in https://github.com/Jarred-Sumner/bun/pull/367

- fix a11y issues on landing by @alexkuz in https://github.com/Jarred-Sumner/bun/pull/225

- Add a space in page.tsx by @eyalcohen4 in https://github.com/Jarred-Sumner/bun/pull/264

Internal:

- Allow setting LLVM_PREFIX and MIN_MACOS_VERSION as environment variables by @aslilac in https://github.com/Jarred-Sumner/bun/pull/237

- Use Node.js v18.x from NodeSource to use string.replaceAll method by @hnakamur in https://github.com/Jarred-Sumner/bun/pull/268

- refactor: wrap BigInt tests in describe block by @jsjoeio in https://github.com/Jarred-Sumner/bun/pull/278

- Add needed dependencies to Makefile devcontainer target by @hnakamur in https://github.com/Jarred-Sumner/bun/pull/269

- [strings] Fix typo in string_immutable.zig by @eltociear in https://github.com/Jarred-Sumner/bun/pull/274

README:

- Add troubleshooting for old intel CPUs by @coffee-is-power in https://github.com/Jarred-Sumner/bun/pull/386

- docs: add callout for typedefs with TypeScript by @josefaidt in https://github.com/Jarred-Sumner/bun/pull/276

- Added label for cpu by @isaac-mcfadyen in https://github.com/Jarred-Sumner/bun/pull/322

- chore(examples): Updates start doco #326 by @mrowles in https://github.com/Jarred-Sumner/bun/pull/328

- fix devcontainer starship installation by @shanehsi in https://github.com/Jarred-Sumner/bun/pull/345

- Update README.md by @PyBaker in https://github.com/Jarred-Sumner/bun/pull/370

- Add Bun logo in README by @DanielTolentino in https://github.com/Jarred-Sumner/bun/pull/228

- Fix `Safari's implementation` broken link by @F3n67u in https://github.com/Jarred-Sumner/bun/pull/257

- docs: Fix broken toc link by @hyp3rflow in https://github.com/Jarred-Sumner/bun/pull/218

New Contributors

- @addy made their first contribution in https://github.com/Jarred-Sumner/bun/pull/207

- @styfle made their first contribution in https://github.com/Jarred-Sumner/bun/pull/212

- @lucacasonato made their first contribution in https://github.com/Jarred-Sumner/bun/pull/215

- @logikaljay made their first contribution in https://github.com/Jarred-Sumner/bun/pull/236

- @intergalacticspacehighway made their first contribution in https://github.com/Jarred-Sumner/bun/pull/245

- @aslilac made their first contribution in https://github.com/Jarred-Sumner/bun/pull/237

- @F3n67u made their first contribution in https://github.com/Jarred-Sumner/bun/pull/257

- @WebReflection made their first contribution in https://github.com/Jarred-Sumner/bun/pull/254

- @MrBaggieBug made their first contribution in https://github.com/Jarred-Sumner/bun/pull/232

- @DanielTolentino made their first contribution in https://github.com/Jarred-Sumner/bun/pull/228

- @jsjoeio made their first contribution in https://github.com/Jarred-Sumner/bun/pull/278

- @hyp3rflow made their first contribution in https://github.com/Jarred-Sumner/bun/pull/218

- @hnakamur made their first contribution in https://github.com/Jarred-Sumner/bun/pull/269

- @egoarka made their first contribution in https://github.com/Jarred-Sumner/bun/pull/316

- @josefaidt made their first contribution in https://github.com/Jarred-Sumner/bun/pull/276

- @isaac-mcfadyen made their first contribution in https://github.com/Jarred-Sumner/bun/pull/322

- @mrowles made their first contribution in https://github.com/Jarred-Sumner/bun/pull/328

- @eyalcohen4 made their first contribution in https://github.com/Jarred-Sumner/bun/pull/264

- @Nutlope made their first contribution in https://github.com/Jarred-Sumner/bun/pull/334

- @eltociear made their first contribution in https://github.com/Jarred-Sumner/bun/pull/274

- @shanehsi made their first contribution in https://github.com/Jarred-Sumner/bun/pull/345

- @PyBaker made their first contribution in https://github.com/Jarred-Sumner/bun/pull/370

- @michellbrito made their first contribution in https://github.com/Jarred-Sumner/bun/pull/367

- @coffee-is-power made their first contribution in https://github.com/Jarred-Sumner/bun/pull/386

- @kjvalencik made their first contribution in https://github.com/Jarred-Sumner/bun/pull/392

Full Changelog: Jarred-Sumner/bun@bun-v0.1.1...bun-v0.1.2

Bun v0.1.1

What's New

- Web Streams: `ReadableStream`, `WritableStream`, `TransformStream` and more. This was a massive project. There are still some reliability things to fix, but I'm very happy with the performance, and I think you will be too.

- `WebSocket` is now a global, powered by a custom WebSocket client

- Dynamic `require()`

- 50% faster `TextEncoder`

- ~30% faster React rendering in production due to a JSX transpiler optimization

- Streaming React SSR is now supported

- Dozens of small bug fixes to the HTTP server

- Discord interactions template by @evanwashere in https://github.com/Jarred-Sumner/bun/pull/2

- Group zsh completion options by type by @alexkuz in https://github.com/Jarred-Sumner/bun/pull/194

New Contributors

- @mjavadhpour made their first contribution in https://github.com/Jarred-Sumner/bun/pull/179

- @eswr made their first contribution in https://github.com/Jarred-Sumner/bun/pull/201

Full Changelog: Jarred-Sumner/bun@bun-v0.0.83...bun-v0.1.0

bun v0.0.83

To upgrade:

```sh
bun upgrade
```

Having trouble upgrading? You can also try the install script:

```sh
curl https://bun.sh/install | bash
```

Thanks to:

- @kriszyp for tons of helpful feedback on how to improve Node-API support in bun

- @evanwashere for the idea & name for the `CFunction` abstraction

- @adaptive for replacing `"wrangler@beta"` with `"wrangler"` in the examples for `bun add`

- @littledivy for adding a couple missing packages to the build instructions

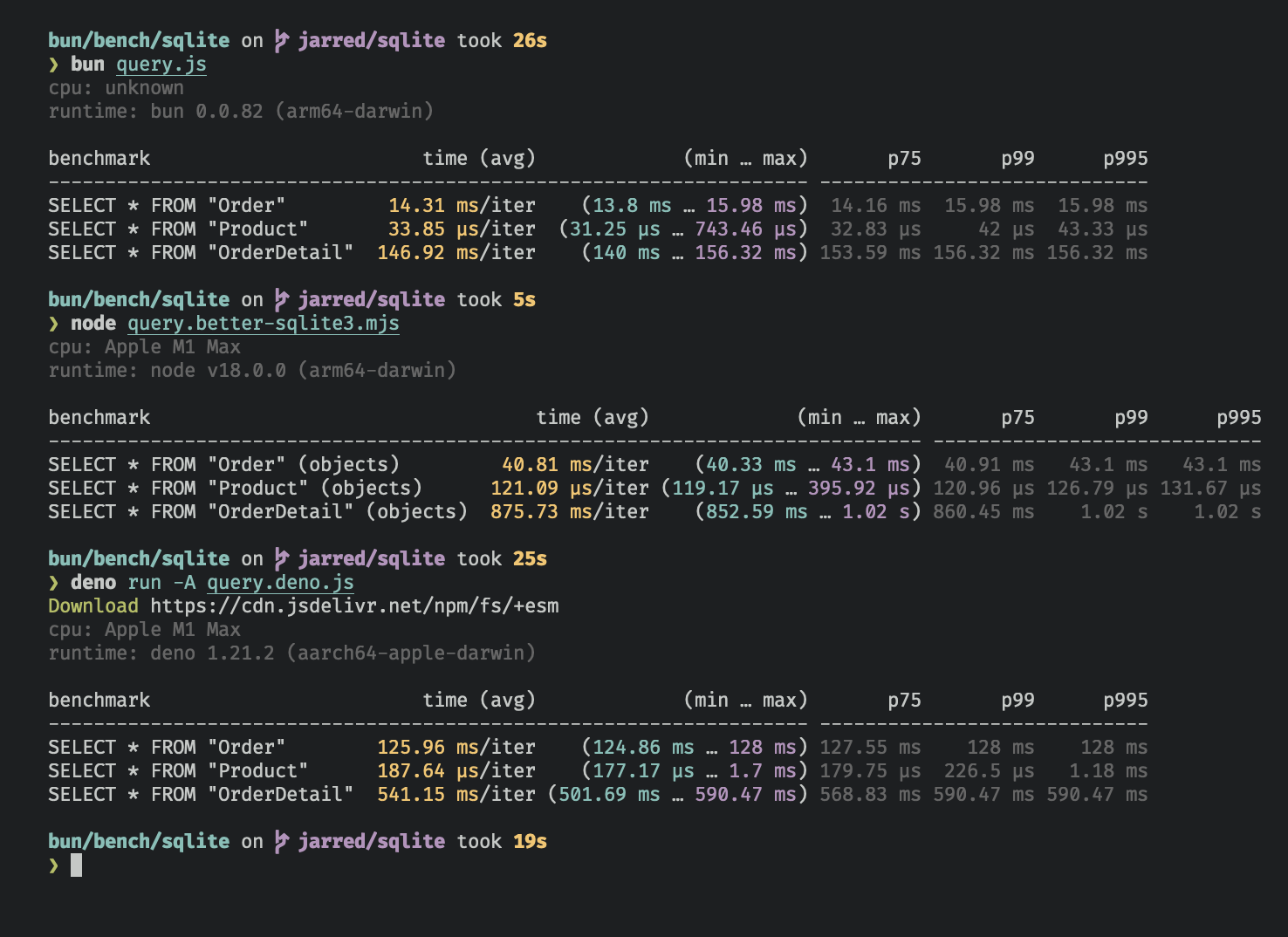

bun:sqlite

bun:sqlite is a high-performance builtin SQLite module for bun.js.

It tends to be around 3x faster than the popular better-sqlite3 npm package

Note: in the benchmark I tweeted earlier, better-sqlite3 always returned arrays of arrays rather than arrays of objects, which was inconsistent with what bun:sqlite & deno's x/sqlite were doing
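To make the note concrete, here are the two result shapes. An "arrays of arrays" result stores each row as a plain list of column values. An "arrays of objects" result, which is what the `bun:sqlite` examples below return, builds one object per row keyed by column name, and that is extra work for every row. The hypothetical `rowsToObjects` helper below converts the first shape into the second. It only illustrates the difference and is not part of either library.

```typescript
type Row = Record<string, unknown>;

// Hypothetical helper: turn rows-as-arrays into rows-as-objects using column names.
function rowsToObjects(columns: string[], rows: unknown[][]): Row[] {
  return rows.map((values) => {
    const row: Row = {};
    // One property assignment per column, per row: the extra work an
    // arrays-of-objects API does that an arrays-of-arrays API skips
    columns.forEach((name, i) => {
      row[name] = values[i];
    });
    return row;
  });
}

// The same data in both shapes, using the table from the usage example
const asArrays: unknown[][] = [
  [1, "Welcome to bun!"],
  [2, "Hello World!"],
];
const asObjects = rowsToObjects(["id", "greeting"], asArrays);
```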

Usage

```js
import { Database } from "bun:sqlite";

const db = new Database("mydb.sqlite");
db.run(
  "CREATE TABLE IF NOT EXISTS foo (id INTEGER PRIMARY KEY AUTOINCREMENT, greeting TEXT)"
);
db.run("INSERT INTO foo (greeting) VALUES (?)", "Welcome to bun!");
db.run("INSERT INTO foo (greeting) VALUES (?)", "Hello World!");

// get the first row
db.query("SELECT * FROM foo").get();
// { id: 1, greeting: "Welcome to bun!" }

// get all rows
db.query("SELECT * FROM foo").all();
// [
//   { id: 1, greeting: "Welcome to bun!" },
//   { id: 2, greeting: "Hello World!" },
// ]

// get all rows matching a condition
db.query("SELECT * FROM foo WHERE greeting = ?").all("Welcome to bun!");
// [
//   { id: 1, greeting: "Welcome to bun!" },
// ]

// get first row matching a named condition (.get() returns a single row)
db.query("SELECT * FROM foo WHERE greeting = $greeting").get({
  $greeting: "Welcome to bun!",
});
// { id: 1, greeting: "Welcome to bun!" }
```

There are more detailed docs in Bun's README.

bun:sqlite's API is loosely based on @joshuawise's better-sqlite3

New in bun:ffi

CFunction lets you call native library functions from a function pointer.

It works like `dlopen`, but it's for cases where you already have the function pointer, so you don't need to open a library. This is useful for:

- callbacks passed from native libraries to JavaScript

- using Node-API and bun:ffi together

```ts
import { CFunction } from "bun:ffi";

// Somehow you got this function pointer (e.g. passed to you from native code)
declare const myNativeLibraryGetVersion: number | bigint;

const getVersion = new CFunction({
  returns: "cstring",
  args: [],
  // ptr is required
  // this is where the function pointer goes!
  ptr: myNativeLibraryGetVersion,
});

getVersion();
getVersion.close();
```

`linkSymbols` is like `CFunction`, except it is for when there are multiple functions. It returns the same object as `dlopen`, except `ptr` is required and there is no path.

```ts
import { linkSymbols } from "bun:ffi";

// Hypothetical: however you obtained the three function pointers
declare function getVersionPtrs(): [number | bigint, number | bigint, number | bigint];

const [majorPtr, minorPtr, patchPtr] = getVersionPtrs();

const lib = linkSymbols({
  // Unlike with dlopen(), the names here can be whatever you want
  getMajor: {
    returns: "cstring",
    args: [],
    // Since this doesn't use dlsym(), you have to provide a valid ptr
    // That ptr could be a number or a bigint
    // An invalid pointer will crash your program.
    ptr: majorPtr,
  },
  getMinor: {
    returns: "cstring",
    args: [],
    ptr: minorPtr,
  },
  getPatch: {
    returns: "cstring",
    args: [],
    ptr: patchPtr,
  },
});

const [major, minor, patch] = [
  lib.symbols.getMajor(),
  lib.symbols.getMinor(),
  lib.symbols.getPatch(),
];
```

`new CString(ptr)` should be a little faster due to using a more optimized function for getting the length of a string.
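A C string has no stored length. Reading one means first scanning for the terminating NUL byte (what `strlen` does), and that is the step the note above says got faster. The sketch below mimics this over a `Uint8Array` instead of a raw pointer. `readCString` is a hypothetical helper, not part of `bun:ffi`.

```typescript
// Hypothetical helper: decode a NUL-terminated UTF-8 string from a byte buffer.
// Stands in for what reading a C string through a pointer involves.
function readCString(bytes: Uint8Array, offset = 0): string {
  // The strlen step: find the terminating NUL byte
  let end = bytes.indexOf(0, offset);
  // No terminator inside the buffer: stop at the end instead of reading past it
  if (end === -1) end = bytes.length;
  return new TextDecoder().decode(bytes.subarray(offset, end));
}

// Sample bytes: a string, its NUL terminator, then unrelated data
const buf = new TextEncoder().encode("3.39.2\0garbage after the terminator");
```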

require.resolve()

Running require.resolve("my-module") in Bun.js will now resolve the path to the module. Previously, this was not supported.

In browsers, it becomes the absolute filepath at build-time. In node, it's left in without any changes.

Internally, Bun's JavaScript transpiler transforms it to:

```js
// input:
require.resolve("my-module");

// output
import.meta.resolveSync("my-module");
```

You can see this for yourself by running `bun build ./file.js --platform=bun`.

"node:module" module polyfill

Node's "module" module lets you create require functions from ESM modules.

Bun now has a polyfill that implements a subset of the "module" module.

Normally require() in bun transforms statically at build-time to an ESM import statement. That doesn't work as well for Node-API (napi) modules because they cannot be statically analyzed by a JavaScript parser (since they're not JavaScript).

For napi modules, bun uses a dynamic require function and the "module" module exports a way to create those using the same interface as in Node.js

```js
import { createRequire } from "module";
// this also works:
// import { createRequire } from "node:module";

var require = createRequire(import.meta.url);

require.resolve("my-module");

// dynamic require is supported for:
// - .json files
// - .node files (napi modules)
require("my-napi-module");
```

This is mostly intended for improving Node-API compatibility with modules loaded from ESM.

As an extra thing, you can also use require() this way for .json files.

Bun.Transpiler – pass objects to macros

Bun.Transpiler now supports passing objects to macros.

```jsx
import { Transpiler } from "bun";
import { parseCookie } from "my-cookie-lib";
import { Database } from "bun:sqlite";

const transpiler = new Transpiler();
const db = new Database("mydb.sqlite");

export default {
  fetch(req) {
    const transpiled = transpiler.transformSync(
      `
import {getUser} from 'macro:./get-user';

export function Hello({name}) {
  return <div>Hello {name}</div>;
}

export const HelloCurrentUser = <Hello {...getUser()} />;
`,
      // passing contextual data to Bun.Transpiler
      {
        userId: parseCookie(req.headers.get("Cookie")).userId,
        db: db,
      }
    );

    return new Response(transpiled, {
      headers: { "Content-Type": "application/javascript" },
    });
  },
};
```

Then, in `get-user.js`:

```js
// db, userId is now accessible in macros
export function getUser(expr, { db, userId }) {
  // we can use it to query the database while transpiling
  return db.query("SELECT * FROM users WHERE id = ? LIMIT 1").get(userId);
}
```

That inlines the returned current user into the JavaScript source code, producing output equivalent to this:

```jsx
export function Hello({ name }) {
  return <div>Hello {name}</div>;
}

// notice that the current user is inlined rather than a function call
export const HelloCurrentUser = <Hello name="Jarred" />;
```

Bug fixes

- `Buffer.from(arrayBuffer, byteOffset, length)` now works as expected (thanks to @kriszyp for reporting)

Misc

- Receiving and sending strings to Node-API modules should be a little faster

bun v0.0.81

To upgrade:

```sh
bun upgrade
```

Bun.js gets Node-API support

Node-API is 1.75x - 3x faster in Bun compared to Node.js 18 (in call overhead)

Just like in Node.js, to load a Node-API module, use require('my-npm-package') or use process.dlopen.

90% of the API is implemented, though it is certainly buggy right now.

Polyfills & new APIs in Bun.js v0.0.81

The following functions have been added:

- `import.meta.resolveSync` synchronously runs the module resolver for the currently-referenced file

- `import.meta.require` synchronously loads `.node` or `.json` modules and works with dynamic paths. This doesn't use ESM and doesn't run the transpiler, which is why regular JS files are not supported. This is mostly an implementation detail for how `require` works for Node-API modules, but it could also be used outside of that if you want

- `Bun.gzipSync`, `Bun.gunzipSync`, `Bun.inflateSync`, and `Bun.deflateSync`, which expose native bindings to `zlib-cloudflare`. On macOS aarch64, `gzipSync` is ~3x faster than in Node. This isn't wired up to the `"zlib"` polyfill in bun yet

Additionally:

- `__dirname` is now supported for all targets (including browsers)

- `__filename` is now supported for all targets (including browsers)

- `Buffer.byteLength` is now implemented

Several packages using Node-API also use detect-libc. Bun polyfills detect-libc because bun doesn't support child_process yet and this improves performance a little.

Bug fixes

bun v0.0.79

To upgrade:

```sh
bun upgrade
```

If you run into any issues with upgrading, try running this:

```sh
curl https://bun.sh/install | bash
```

Highlights

- `"bun:ffi"` is a new bun.js core module that lets you use third-party native libraries written in languages that support the C ABI (Zig, Rust, C/C++ etc). It's like a foreign function interface API but faster

- `Buffer` (like in Node.js) is now a global, but the implementation is incomplete; see tracking issue. If you import `"buffer"`, it continues to use the browser polyfill, so this shouldn't be a breaking change

- 2x faster `TextEncoder` & `TextDecoder` thanks to some fixes to the vectorization (SIMD) code

- Faster `TypedArray.from`. JavaScriptCore's implementation of `TypedArray.from` uses the code path for JS iterators when it could instead use an optimized code path for copying elements from an array, like V8 does. I have filed an upstream bug with WebKit about this, but I expect to do a more thorough fix for this in Bun and upstream that. For now, Bun reuses `TypedArray.prototype.set` when possible

- 17x faster `Uint8Array.fill`

- `Bun.Transpiler` gets an API for removing & replacing exports

- `SHA512`, `SHA256`, `SHA1`, and more are now exposed in the `"bun"` module and the `Bun` global. They use BoringSSL's optimized hashing functions.

- Fixed a reliability bug with `new Response(Bun.file(path))`

- Bun's HTTP server now has a `stop()` function. Before, there was no way to stop it without terminating the process 😆

- @evanwashere expose mmap size and offset option

- @jameslahm [node] Add more fs constants
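The `TypedArray.from` item above describes a fast path. When the source is already an array or typed array with a known length, its elements can be copied with `TypedArray.prototype.set` instead of going through the iterator protocol one value at a time. Below is a minimal sketch of that idea for `Uint8Array`. The `fastUint8From` name is hypothetical, and this is not Bun's or JavaScriptCore's code.

```typescript
// Sketch of the fast-path idea for Uint8Array.from (no mapping function).
function fastUint8From(source: ArrayLike<number> | Iterable<number>): Uint8Array {
  const isPlainArray = Array.isArray(source);
  const isTypedArray = ArrayBuffer.isView(source) && !(source instanceof DataView);
  if (isPlainArray || isTypedArray) {
    // Fast path: the length is known up front, so allocate once and bulk-copy.
    // Caveat: a real engine must also verify the source's Symbol.iterator has not
    // been replaced, because TypedArray.from is specified to use it when present.
    const arrayLike = source as ArrayLike<number>;
    const out = new Uint8Array(arrayLike.length);
    out.set(arrayLike);
    return out;
  }
  // Generic path: Sets, generators, plain array-like objects, ...
  return Uint8Array.from(source as Iterable<number>);
}
```

Both paths apply the same `ToUint8` conversion, so for example `300` becomes `44` either way.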

The next large project for bun is a production bundler (see the tracking issue).

New Contributors

- @jameslahm made their first contribution in https://github.com/Jarred-Sumner/bun/pull/144

- @lawrencecchen made their first contribution in https://github.com/Jarred-Sumner/bun/pull/151

bun:ffi

The "bun:ffi" core module lets you efficiently call native libraries from JavaScript. It works with languages that support the C ABI (Zig, Rust, C/C++, C#, Nim, Kotlin, etc).

Get the locally-installed SQLite version number:

```js
import { dlopen, suffix } from "bun:ffi";

const sqlite3Path = process.env.SQLITE3_PATH || `libsqlite3.${suffix}`;

const {
  symbols: { sqlite3_libversion },
} = dlopen(sqlite3Path, {
  sqlite3_libversion: {
    returns: "cstring",
  },
});

console.log("SQLite version", sqlite3_libversion());
```

FFI is really exciting because there is no runtime-specific code. You don't have to write a Bun FFI module (that isn't a thing). Use JavaScript to write bindings to native libraries installed with homebrew, with your linux distro's package manager or elsewhere. You can also write bindings to your own native code.

FFI has a reputation of being slower than runtime-specific APIs like napi – but that's not true for bun:ffi.

Bun embeds a small C compiler that generates code on-demand and converts types between JavaScript & native code inline. A lot of overhead in native libraries comes from function calls that validate & convert types, so moving that to just-in-time compiled C using engine-specific implementation details makes that faster. Those C functions are called directly – there is no extra wrapper in the native code side of things.

Some bun:ffi use cases:

- SQLite client

- Skia bindings so you can use Canvas API in bun.js

- Clipboard api

- Fast ffmpeg recording/streaming

- Postgres client (possibly)

- Use Python's `"ndarray"` package from JavaScript (ideally via ndarray's C API and not just embedding Python in bun)

Later (not yet), bun:ffi will be integrated with bun's bundler and that will enable things like:

- Use hermes to strip Flow types for code transpiled in bun

- .sass support

Buffer

A lot of Node.js' Buffer module is now implemented natively in Bun.js, but it's not complete yet.

Here is a comparison of how long various functions take.

Replace & eliminate exports with Bun.Transpiler

For code transpiled with Bun.Transpiler, you can now remove and/or replace exports with a different value.

```jsx
const transpiler = new Bun.Transpiler({
  exports: {
    replace: {
      // Next.js does this
      getStaticProps: ["__N_SSG", true],
    },
    eliminate: ["localVarToRemove"],
  },
  treeShaking: true,
  trimUnusedImports: true,
});

const code = `
import fs from "fs";
export var localVarToRemove = fs.readFileSync("/etc/passwd");
import * as CSV from "my-csv-parser";

export function getStaticProps() {
  return {
    props: { rows: CSV.parse(fs.readFileSync("./users-list.csv", "utf8")) },
  };
}

export function Page({ rows }) {
  return (
    <div>
      <h1>My page</h1>
      <p>
        <a href="/about">About</a>
      </p>
      <p>
        <a href="/users">Users</a>
      </p>
      <div>
        {rows.map((columns, index) => (
          <span key={index}>{columns.join(" | ")} </span>
        ))}
      </div>
    </div>
  );
}
`;

console.log(transpiler.transformSync(code));
```

Which outputs (this is the automatic React transform):

```js
export var __N_SSG = true;

export function Page({ rows }) {
  return jsxDEV("div", {
    children: [
      jsxDEV("h1", {
        children: "My page"
      }, undefined, false, undefined, this),
      jsxDEV("p", {
        children: jsxDEV("a", {
          href: "/about",
          children: "About"
        }, undefined, false, undefined, this)
      }, undefined, false, undefined, this),
      jsxDEV("p", {
        children: jsxDEV("a", {
          href: "/users",
          children: "Users"
        }, undefined, false, undefined, this)
      }, undefined, false, undefined, this),
      jsxDEV("div", {
        children: rows.map((columns, index) => jsxDEV("span", {
          children: [
            columns.join(" | "),
            " "
          ]
        }, index, true, undefined, this))
      }, undefined, false, undefined, this)
    ]
  }, undefined, true, undefined, this);
}
```

More new stuff

- `server.stop()` lets you stop bun's HTTP server

- [bun.js] Add `Bun.nanoseconds()` to report time in nanoseconds

Hashing functions powered by BoringSSL:

```js
import {
  SHA1,
  MD5,
  MD4,
  SHA224,
  SHA512,
  SHA384,
  SHA256,
  SHA512_256,
} from "bun";

// hash the string and return as a Uint8Array
SHA1.hash("123456");
MD5.hash("123456");
MD4.hash("123456");
SHA224.hash("123456");
SHA512.hash("123456");
SHA384.hash("123456");
SHA256.hash("123456");
SHA512_256.hash("123456");

// output as a hex string
SHA1.hash(new Uint8Array(42), "hex");
MD5.hash(new Uint8Array(42), "hex");
MD4.hash(new Uint8Array(42), "hex");
SHA224.hash(new Uint8Array(42), "hex");
SHA512.hash(new Uint8Array(42), "hex");
SHA384.hash(new Uint8Array(42), "hex");
SHA256.hash(new Uint8Array(42), "hex");
SHA512_256.hash(new Uint8Array(42), "hex");

// incrementally update the hashing function value and convert it at the end to a hex string
// similar to node's API in require('crypto')
// this is not wired up yet to bun's "crypto" polyfill, but it really should be
new SHA1().update(new Uint8Array(42)).digest("hex");
new MD5().update(new Uint8Array(42)).digest("hex");
new MD4().update(new Uint8Array(42)).digest("hex");
new SHA224().update(new Uint8Array(42)).digest("hex");
new SHA512().update(new Uint8Array(42)).digest("hex");
new SHA384().update(new Uint8Array(42)).digest("hex");
new SHA256().update(new Uint8Array(42)).digest("hex");
new SHA512_256().update(new Uint8Array(42)).digest("hex");
```

Reliability improvements

bun v0.0.78

To upgrade:

```sh
bun upgrade
```

What's new:

You can now import from "bun" in bun.js. You can still use the global Bun.

Before:

```js
await Bun.write("output.txt", Bun.file("input.txt"));
```

After:

```js
import { write, file } from "bun";

await write("output.txt", file("input.txt"));
```

This isn't a breaking change – you can still use `Bun` as a global same as before.

How it works

Bun's JavaScript printer replaces the "bun" import specifier with globalThis.Bun.

```js
var { write, file } = globalThis.Bun;

await write("output.txt", file("input.txt"));
```

You'll probably want to update types too:

```sh
bun add bun-types
```

Bug fixes

- [fs] Add missing `isFile` and `isDirectory` functions to `fs.stat()` - 7cd3d13

- [js parser] Fix a code simplification bug that could happen when using `!` and the comma operator - 4de7978

- [bun bun] Fix using `bun bun` with `--platform=bun` set - 43b1866

Bug fixes from v0.0.77

- [bun dev] Fix race condition in file watcher

- [bun install] Fix falling back to copyfile when hardlink fails on linux due to differing filesystems - 74309a1

- [Bun.serve] When a `Bun.file` is not found and the error handler is not run, the default status code is now 404

- [Bun.serve] Fix hanging when a `Bun.file` is sent with sendfile and the request aborts or errors

- [Bun.serve] Decrement the reference count for a `FileBlob` sent via sendfile after the callback completes instead of before

- [Bun.serve] Serve `.ts` and `.tsx` files with the `text/javascript` MIME type by default instead of MPEG2 Video
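The `.ts`/`.tsx` MIME type fix above exists because the `.ts` extension is also registered for MPEG-2 transport streams (`video/mp2t`). A generic extension-to-MIME lookup therefore serves TypeScript files as video. The sketch below is a lookup that overrides those entries. The table contents and the `contentTypeFor` name are assumptions made for illustration, not Bun's actual mapping.

```typescript
// Hypothetical extension -> Content-Type table (illustrative, not Bun's).
const MIME_TYPES: Record<string, string> = {
  ".html": "text/html;charset=utf-8",
  ".css": "text/css;charset=utf-8",
  ".js": "text/javascript;charset=utf-8",
  ".mjs": "text/javascript;charset=utf-8",
  ".json": "application/json;charset=utf-8",
  // Generic tables map .ts to video/mp2t (MPEG-2 transport stream); here we
  // assume a .ts file served by a JS runtime is TypeScript source instead.
  ".ts": "text/javascript;charset=utf-8",
  ".tsx": "text/javascript;charset=utf-8",
};

function contentTypeFor(path: string): string {
  // Only look at the last path segment, so "a.b/README" has no extension
  const base = path.slice(path.lastIndexOf("/") + 1);
  const dot = base.lastIndexOf(".");
  // dot > 0 also treats dotfiles like ".env" as having no extension
  const ext = dot > 0 ? base.slice(dot).toLowerCase() : "";
  return MIME_TYPES[ext] ?? "application/octet-stream";
}
```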

bun v0.0.76

To upgrade:

```sh
bun upgrade
```

What's new

- Type definitions for better editor integration and TypeScript LSP! There are types for the runtime APIs now in the `bun-types` npm package. A PR for @types/bun is awaiting review (feel free to nudge them).

- `Bun.serve()` is a fast HTTP & HTTPS server that supports the `Request` and `Response` Web APIs. The server is a fork of uWebSockets

- `Bun.write()` – one API for writing files, pipes, and copying files leveraging the fastest system calls available for the input & platform. It uses the `Blob` Web API.

- `Bun.mmap(path)` lets you read files as a live-updating `Uint8Array` via the mmap(2) syscall. Thank you @evanwashere!!

- `Bun.hash(bufferOrString)` exposes fast non-cryptographic hashing functions. Useful for things like `ETag`, not for passwords.

- `Bun.allocUnsafe(length)` creates a new `Uint8Array` ~3.5x faster than `new Uint8Array`, but it is not zero-initialized. This is similar to Node's `Buffer.allocUnsafe`, though without the memory pool currently

- Several web APIs have been added, including `URL`

- A few more examples have been added to the `examples` folder

- Bug fixes to `fs.read()` and `fs.write()` and some more tests for fs

- `Response.redirect()`, `Response.json()`, and `Response.error()` have been added

- `import.meta.url` is now a `file://` URL string

- `Bun.resolve` and `Bun.resolveSync` let you resolve the same way `import` does. They throw a `ResolveError` on failure (same as `import`)

- `Bun.stderr` and `Bun.stdout` now return a `Blob`

- `SharedArrayBuffer` is now enabled (thanks @evanwashere!)

- Updated Next.js version
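The `Bun.hash` item above mentions ETags. As a sketch of that use case, the code below derives an ETag from the response body with a fast non-cryptographic hash. It answers `304 Not Modified` when the client's `If-None-Match` header matches. The hash is 32-bit FNV-1a, used as a stand-in: we assume nothing about which algorithm `Bun.hash` actually uses. The code relies on the standard `Request`/`Response` Web APIs, and the helper names are hypothetical.

```typescript
// 32-bit FNV-1a: a tiny, fast, non-cryptographic hash (stand-in for Bun.hash).
function fnv1a32(input: string): number {
  const bytes = new TextEncoder().encode(input);
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < bytes.length; i++) {
    hash ^= bytes[i];
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, mod 2^32
  }
  return hash >>> 0;
}

// ETags are quoted strings; use 8 hex digits from the 32-bit hash
function etagFor(body: string): string {
  return `"${fnv1a32(body).toString(16).padStart(8, "0")}"`;
}

// True if If-None-Match lists this ETag (or is "*"); weak "W/" prefixes are ignored
function matchesIfNoneMatch(header: string | null, etag: string): boolean {
  if (header === null) return false;
  if (header.trim() === "*") return true;
  return header
    .split(",")
    .map((tag) => tag.trim().replace(/^W\//, ""))
    .includes(etag);
}

function respondWithETag(req: Request, body: string): Response {
  const etag = etagFor(body);
  if (matchesIfNoneMatch(req.headers.get("If-None-Match"), etag)) {
    // The client already has this exact body, so don't send it again
    return new Response(null, { status: 304, headers: { ETag: etag } });
  }
  return new Response(body, {
    headers: { ETag: etag, "Content-Type": "text/plain;charset=utf-8" },
  });
}
```

This fits the "not for passwords" caveat: an ETag only has to change when the body changes, and collisions cost at most a stale cache hit.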

New Web APIs in bun.js

Going forward, Bun will first try to rely on WebKit/Safari's implementations of Web APIs rather than writing new ones. This will improve Web API compatibility while reducing bun's scope, without compromising performance

These Web APIs are now available in bun.js and powered by Safari's implementation:

- `URL`

- `URLSearchParams`

- `ErrorEvent`

- `Event`

- `EventTarget`

- `DOMException`

- `Headers` uses WebKit's implementation now instead of a custom one

- `AbortSignal` (not wired up to fetch or fs yet; it exists but is not very useful)

- `AbortController`

Also added:

- `reportError` (does not dispatch an `"error"` event yet)

Additionally, all the builtin constructors in bun now have a .prototype property (this was missing before)

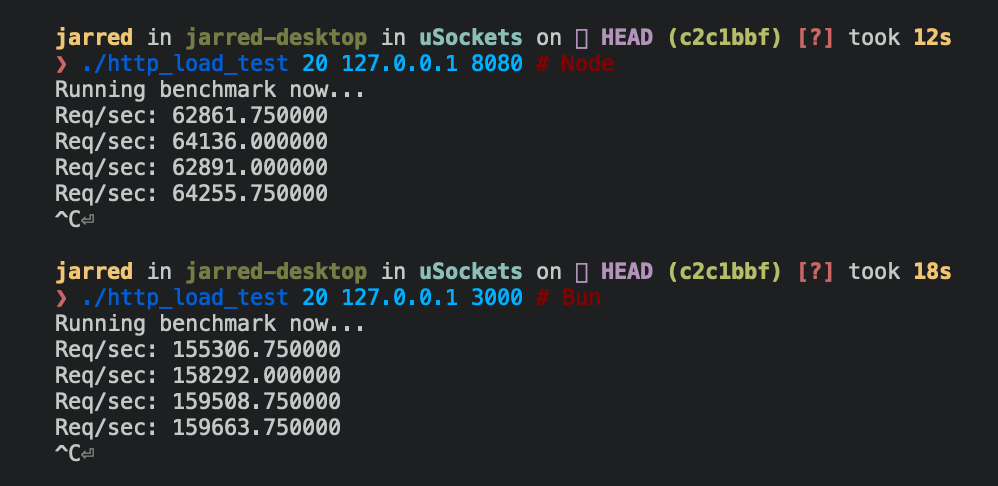

Bun.serve - fast HTTP server

For a hello world HTTP server that writes "bun!", Bun.serve serves about 2.5x more requests per second than node.js on Linux:

| Requests per second | Runtime |
|---|---|
| ~64,000 | Node 16 |
| ~160,000 | Bun |

Bigger is better

Code

Bun:

```typescript
Bun.serve({
  fetch(req: Request) {
    return new Response(`bun!`);
  },
  port: 3000,
});
```

Node:

```js
require("http")
  .createServer((req, res) => res.end("bun!"))
  .listen(8080);
```

Usage

Two ways to start an HTTP server with bun.js:

`export default` an object with a `fetch` function

If the file used to start bun has a default export with a `fetch` function, it will start the HTTP server.

```js
// hi.js
export default {
  fetch(req) {
    return new Response("HI!");
  },
};

// bun ./hi.js
```

`fetch` receives a `Request` object and must return either a `Response` or a `Promise<Response>`. In a future version, it might have additional arguments for things like cookies.
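A slightly larger handler in the same `export default` style, as a sketch: the file name `hi.ts`, the routes, and the `/echo` endpoint are hypothetical. It shows that `fetch` may return a `Response` directly or a `Promise<Response>` when it has to wait on something, such as reading the request body.

```typescript
// hi.ts (hypothetical file), started with `bun ./hi.ts`.
const server = {
  fetch(req: Request): Response | Promise<Response> {
    const { pathname } = new URL(req.url);
    if (pathname === "/") {
      return new Response("HI!"); // synchronous Response
    }
    if (pathname === "/echo" && req.method === "POST") {
      // Reading the body is async, so this branch returns a Promise<Response>.
      return req.text().then((body) => new Response(body));
    }
    return new Response("Not Found", { status: 404 });
  },
};

export default server;
```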

Bun.servestarts the http server explicitly

Bun.serve({

fetch(req) {

return new Response("HI!");

},

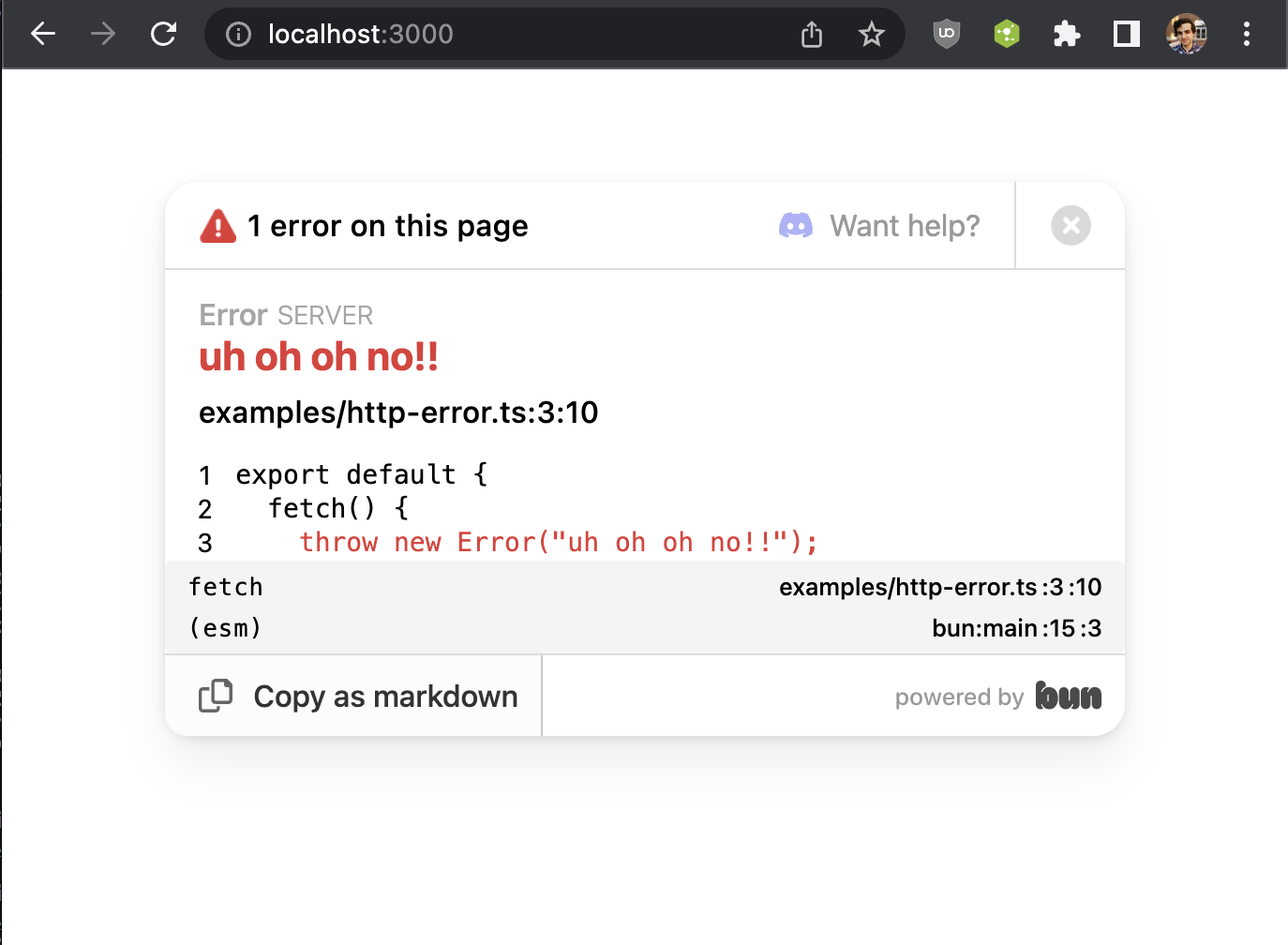

});Error handling

For error handling, you get an error function.

If development: true and error is not defined or doesn't return a Response, you will get an exception page with a stack trace:

This will hopefully make it easier to debug issues with bun until bun gets debugger support. This error page is based on what `bun dev` does.

If the `error` function returns a `Response`, it will be served instead:

```typescript
Bun.serve({
  fetch(req) {
    throw new Error("woops!");
  },
  error(error: Error) {
    return new Response("Uh oh!!\n" + error.toString(), { status: 500 });
  },
});
```

If the `error` function itself throws and `development` is `false`, a generic 500 page will be shown.
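The fallback order described above can be summarized as a small model. This is a hypothetical sketch of the documented behavior, not Bun's implementation: `dispatch`, `FetchHandler`, and `ErrorHandler` are illustrative names, it only handles synchronous handlers, and since the notes do not say what happens when `error` throws while `development` is `true`, the model's choice there (falling through to the exception page) is an assumption.

```typescript
type FetchHandler = (req: Request) => Response;
type ErrorHandler = (error: Error) => Response | undefined;

// Hypothetical model of Bun.serve's error fallback (synchronous handlers only).
function dispatch(
  req: Request,
  onFetch: FetchHandler,
  onError?: ErrorHandler,
  development = false,
): Response {
  try {
    return onFetch(req);
  } catch (thrown) {
    const err = thrown instanceof Error ? thrown : new Error(String(thrown));
    try {
      // 1. A Response returned by the error handler is served as-is.
      const res = onError?.(err);
      if (res instanceof Response) return res;
    } catch {
      // 2. The error handler itself threw: fall through to the default pages.
    }
    if (development) {
      // 3. Bun renders an exception page with a stack trace; this model returns the stack as text.
      return new Response(err.stack ?? err.toString(), { status: 500 });
    }
    // 4. Otherwise, a generic 500 page.
    return new Response("Internal Server Error", { status: 500 });
  }
}
```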

Currently, there is no way to stop the HTTP server once started 😅, but that will be added in a future version.

The interface for Bun.serve is based on what Cloudflare Workers does.

Bun.write() – optimizing I/O

Bun.write lets you write, copy or pipe files automatically using the fastest system calls compatible with the input and platform.

```typescript
interface Bun {
  write(
    destination: string | number | FileBlob,
    input: string | FileBlob | Blob | ArrayBufferView
  ): Promise<number>;
}
```

| Output | Input | System Call | Platform |
|---|---|---|---|
| file | file | copy_file_range | Linux |
| file | pipe | sendfile | Linux |
| pipe | pipe | splice | Linux |
| terminal | file | sendfile | Linux |
| terminal | terminal | sendfile | Linux |
| socket | file or pipe | sendfile (if http, not https) | Linux |
| file (path, doesn't exist) | file (path) | clonefile | macOS |
| file | file | fcopyfile | macOS |
| file | Blob or string | write | macOS |
| file | Blob or string | write | Linux |

All this complexity is handled by a single function.

```typescript
// Write "Hello World" to output.txt
await Bun.write("output.txt", "Hello World");

// log a file to stdout
await Bun.write(Bun.stdout, Bun.file("input.txt"));

// write the HTTP response body to disk
await Bun.write("index.html", await fetch("http://example.com"));

// this does the same thing
await Bun.write(Bun.file("index.html"), await fetch("http://example.com"));

// copy input.txt to output.txt
await Bun.write("output.txt", Bun.file("input.txt"));
```

Bug fixes

- Fixed a bug on Linux where the HTTP thread would sometimes incorrectly go to sleep, causing requests to hang forever 😢. Previously, it relied on data in the queues to determine whether it should idle; now it increments a counter.
- Fixed a bug that sometimes caused `require` to produce incorrect output depending on how the module was used
- Fixed a number of crashes in `bun dev` related to HMR & websockets - Jarred-Sumner/bun@daeede2
- `fs.openSync` now supports `mode` and `flags` - Jarred-Sumner/bun@c73fcb0
- `fs.read` and `fs.write` were incorrectly returning the output of the `fs/promises` versions; this is fixed
- Fixed a crash that could occur during garbage collection when an `fs` function received a TypedArray as input - Jarred-Sumner/bun@614f64b. This also slightly improves the performance of sending array buffers to native code
- `Response`'s constructor previously read `statusCode` instead of `status`. This was incorrect and has been fixed.
- Fixed a bug where `fs.stat` reported incorrect information on macOS x64
- Slight improvement to HMR reliability when reading large files or slow filesystems over websockets - 89cd35f
- Fixed a potential infinite loop when generating a sourcemap - fe973a5
- Improved performance of accessing `Bun.Transpiler` and `Bun.unsafe` - 29a759a
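With `mode` and `flags` supported, `fs.openSync` can create a file with explicit permissions. The sketch is written against Node's `node:fs` API; the helper `writeWithMode` and the temporary paths are hypothetical, and whether every `fs` function used here was already implemented in this bun release is an assumption.

```typescript
import { closeSync, mkdtempSync, openSync, readFileSync, statSync, writeSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Opens with flags "w" (write-only, create, truncate) and the given permission bits.
// The mode only applies when the file is created, and the process umask is applied on top.
function writeWithMode(path: string, text: string, mode = 0o600): void {
  const fd = openSync(path, "w", mode);
  try {
    writeSync(fd, text);
  } finally {
    closeSync(fd); // always release the descriptor, even if the write throws
  }
}

const dir = mkdtempSync(join(tmpdir(), "open-sync-"));
const file = join(dir, "secret.txt");
writeWithMode(file, "hello");

const contents = readFileSync(file, "utf8");
const perms = statSync(file).mode & 0o777; // permission bits only
```

On POSIX systems with a common umask such as `022`, `perms` is `0o600`; Windows largely ignores these permission bits.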