diff --git a/README.md b/README.md

index c6e9e53855..5ed611dd72 100644

--- a/README.md

+++ b/README.md

@@ -1,98 +1,83 @@

-

+

](https://join.slack.com/t/openfl/shared_invite/zt-ovzbohvn-T5fApk05~YS_iZhjJ5yaTw)

-[](https://opensource.org/licenses/Apache-2.0)

-[](https://arxiv.org/abs/2105.06413)

[](https://bestpractices.coreinfrastructure.org/projects/6599)

-[](https://colab.research.google.com/github/intel/openfl/blob/develop/openfl-tutorials/Federated_Pytorch_MNIST_Tutorial.ipynb)

-

-Open Federated Learning (OpenFL) is a Python 3 framework for Federated Learning. OpenFL is designed to be a _flexible_, _extensible_ and _easily learnable_ tool for data scientists. OpenFL is hosted by The Linux Foundation, aims to be community-driven, and welcomes contributions back to the project.

-Looking for the Open Flash Library project also referred to as OpenFL? Find it [here](https://github.com/openfl/openfl)!

-

-## Installation

+[**Overview**](#overview)

+| [**Features**](#features)

+| [**Installation**](#installation)

+| [**Changelog**](https://openfl.readthedocs.io/en/latest/releases.html)

+| [**Documentation**](https://openfl.readthedocs.io/en/latest/)

-You can simply install OpenFL from PyPI:

+OpenFL is a Python framework for Federated Learning. It enables organizations to train and validate machine learning models on sensitive data. It increases privacy by allowing collaborative model training or validation across local private datasets without ever sharing that data with a central server. OpenFL is hosted by The Linux Foundation.

-```

-$ pip install openfl

-```

-For more installation options check out the [online documentation](https://openfl.readthedocs.io/en/latest/get_started/installation.html).

+## Overview

-## Getting Started

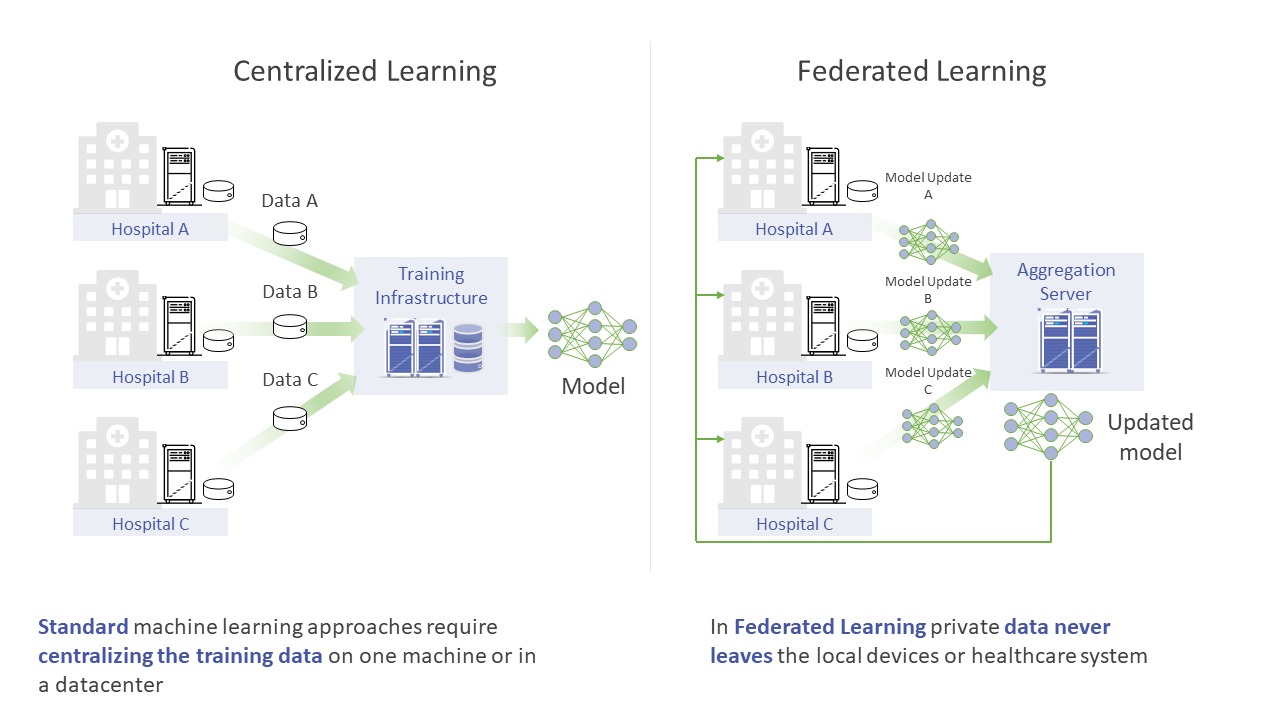

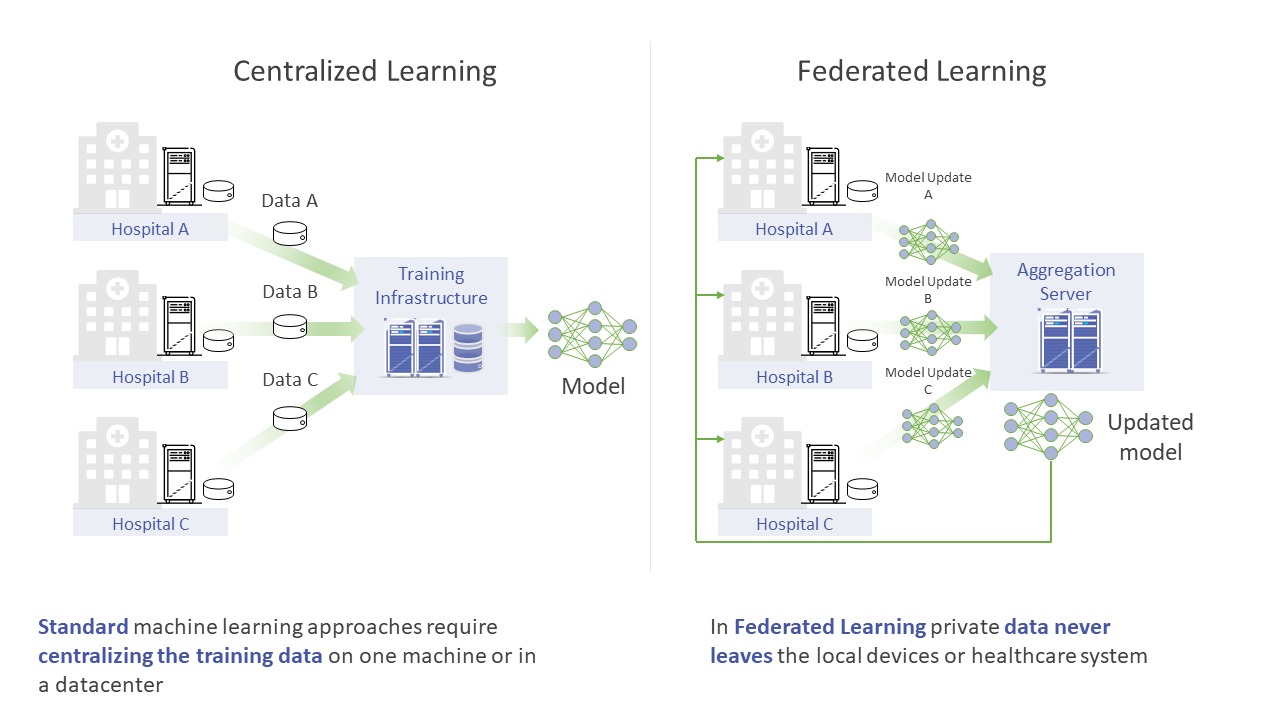

+Federated Learning is a distributed machine learning approach that enables collaborative training and evaluation of models without sharing sensitive data such as personal information, patient records, financial data, or classified information. The only data that must move during a Federated Training experiment is the model parameters and their updates. This contrasts with a Centralized Learning regime, where all data must be moved to a central server or datacenter for massively parallel training.
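
The data-movement claim above can be illustrated with a toy sketch. It is not OpenFL code: `local_update`, `federated_round`, the linear model, and the data are all hypothetical, chosen only to show that raw samples stay at each site while parameters travel.

```python
# Hypothetical toy example: none of these names are OpenFL APIs.

def local_update(weights, private_data, lr=0.05):
    """Run one gradient-descent step for y = w * x + b on a site's private data.

    Only the updated parameters are returned; the raw (x, y) pairs never
    leave this function, mimicking data that stays on the collaborator.
    """
    w, b = weights
    n = len(private_data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in private_data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in private_data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_round(global_weights, sites):
    """Each site trains locally; the server takes a plain mean of the parameters."""
    updates = [local_update(global_weights, data) for data in sites]
    w = sum(u[0] for u in updates) / len(updates)
    b = sum(u[1] for u in updates) / len(updates)
    return (w, b)


# Two sites hold disjoint samples of the same relationship, y = 2x.
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0)],
]

weights = (0.0, 0.0)
for _ in range(1000):
    weights = federated_round(weights, sites)
```

After enough rounds the shared parameters approach `w = 2`, `b = 0`, even though neither site ever sees the other's samples.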

-OpenFL supports two APIs to set up a Federated Learning experiment:

+

-- [Task Runner API](https://openfl.readthedocs.io/en/latest/about/features_index/taskrunner.html):

-Define an experiment and distribute it manually. All participants can verify model code and [FL plan](https://openfl.readthedocs.io/en/latest/about/features_index/taskrunner.html#federated-learning-plan-fl-plan-settings) prior to execution. The federation is terminated when the experiment is finished. This API is meant for enterprise-grade FL experiments, including support for mTLS-based communication channels and TEE-ready nodes (based on Intel® SGX). Follow the [Quick Start Guide](https://openfl.readthedocs.io/en/latest/get_started/quickstart.html#quick-start) for launching your first FL experiment locally. Then, refer to the [TaskRunner API Tutorial](https://github.com/securefederatedai/openfl/tree/develop/openfl-workspace/torch_cnn_mnist/README.md) for customizing the example workspace to your specific needs.

+OpenFL builds on a collaboration between Intel and the Bakas lab at the University of Pennsylvania (UPenn) to develop the [Federated Tumor Segmentation (FeTS)](https://www.fets.ai/) platform (grant award number: U01-CA242871).

-- [Workflow API](https://openfl.readthedocs.io/en/latest/about/features_index/workflowinterface.html) ([*experimental*](https://openfl.readthedocs.io/en/latest/developer_guide/experimental_features.html)):

-Create complex experiments that extend beyond traditional horizontal federated learning. This API enables an experiment to be simulated locally, then seamlessly scaled to a federated setting. See the [experimental tutorials](https://github.com/securefederatedai/openfl/blob/develop/openfl-tutorials/experimental/workflow/) to learn how to coordinate [aggregator validation after collaborator model training](https://github.com/securefederatedai/openfl/tree/develop/openfl-tutorials/experimental/workflow/102_Aggregator_Validation.ipynb), [perform global differentially private federated learning](https://github.com/psfoley/openfl/tree/experimental-workflow-interface/openfl-tutorials/experimental/workflow/Global_DP), measure the amount of private information embedded in a model after collaborator training with [privacy meter](https://github.com/securefederatedai/openfl/blob/develop/openfl-tutorials/experimental/workflow/Privacy_Meter/readme.md), or [add a watermark to a federated model](https://github.com/securefederatedai/openfl/blob/develop/openfl-tutorials/experimental/workflow/301_MNIST_Watermarking.ipynb).

+The grant for FeTS was awarded from the [Informatics Technology for Cancer Research (ITCR)](https://itcr.cancer.gov/) program of the National Cancer Institute (NCI) of the National Institutes of Health (NIH), to Dr. Spyridon Bakas (Principal Investigator) when he was affiliated with the [Center for Biomedical Image Computing and Analytics (CBICA)](https://www.cbica.upenn.edu/) at UPenn. He now heads the [Division of Computational Pathology at Indiana University (IU)](https://medicine.iu.edu/pathology/research/computational-pathology).

-## Requirements

+FeTS is a real-world medical federated learning platform with international collaborators. The original OpenFederatedLearning project and OpenFL are designed to serve as the backend for the FeTS platform, and OpenFL developers and researchers continue to work very closely with IU on the FeTS project. An example is the [FeTS-AI/Front-End](https://github.com/FETS-AI/Front-End), which integrates the group’s medical AI expertise with the OpenFL framework to create a federated learning solution for medical imaging.

-OpenFL supports popular NumPy-based ML frameworks like TensorFlow, PyTorch and Jax which should be installed separately.

-Users can extend the list of supported Machine Learning frameworks if needed.

+Although initially developed for use in medical imaging, OpenFL is designed to be agnostic to the use-case, the industry, and the machine learning framework.

-## Project Overview

-### What is Federated Learning

+For more information, here is a list of relevant [publications](https://openfl.readthedocs.io/en/latest/about/blogs_publications.html).

-[Federated learning](https://en.wikipedia.org/wiki/Federated_learning) is a distributed machine learning approach that enables collaboration on machine learning projects without having to share sensitive data, such as, patient records, financial data, or classified information. The minimum data movement needed across the federation is solely the model parameters and their updates.

+## Installation

-

+Install via PyPI (latest stable release):

+```

+pip install -U openfl

+```

+For more installation options, check out the [installation guide](https://openfl.readthedocs.io/en/latest/installation.html).

-### Background

-OpenFL builds on a collaboration between Intel and the Bakas lab at the University of Pennsylvania (UPenn) to develop the [Federated Tumor Segmentation (FeTS, www.fets.ai)](https://www.fets.ai/) platform (grant award number: U01-CA242871).

+## Features

-The grant for FeTS was awarded from the [Informatics Technology for Cancer Research (ITCR)](https://itcr.cancer.gov/) program of the National Cancer Institute (NCI) of the National Institutes of Health (NIH), to Dr Spyridon Bakas (Principal Investigator) when he was affiliated with the [Center for Biomedical Image Computing and Analytics (CBICA)](https://www.cbica.upenn.edu/) at UPenn and now heading up the [Division of Computational Pathology at Indiana University (IU)](https://medicine.iu.edu/pathology/research/computational-pathology).

+### Ways to set up an FL experiment

+OpenFL supports two ways to set up a Federated Learning experiment:

-FeTS is a real-world medical federated learning platform with international collaborators. The original OpenFederatedLearning project and OpenFL are designed to serve as the backend for the FeTS platform, and OpenFL developers and researchers continue to work very closely with IU on the FeTS project. An example is the [FeTS-AI/Front-End](https://github.com/FETS-AI/Front-End), which integrates the group’s medical AI expertise with OpenFL framework to create a federated learning solution for medical imaging.

+- [TaskRunner API](https://openfl.readthedocs.io/en/latest/about/features_index/taskrunner.html): This API uses short-lived components like the `Aggregator` and `Collaborator`, which terminate at the end of an FL experiment. TaskRunner supports mTLS-based secure communication channels, and TEE-based confidential computing environments.

-Although initially developed for use in medical imaging, OpenFL designed to be agnostic to the use-case, the industry, and the machine learning framework.

+- [Workflow API](https://openfl.readthedocs.io/en/latest/about/features_index/workflowinterface.html): This API allows for experiments beyond the traditional horizontal federated learning paradigm using a pythonic interface. It allows an experiment to be simulated locally, and then to be seamlessly scaled to a federated setting by switching from a local runtime to a distributed, federated runtime.

+ > **Note:** This is an experimental capability.

-You can find more details in the following articles:

-- [Pati S, et al., 2022](https://www.nature.com/articles/s41467-022-33407-5)

-- [Reina A, et al., 2021](https://arxiv.org/abs/2105.06413)

-- [Sheller MJ, et al., 2020](https://www.nature.com/articles/s41598-020-69250-1)

-- [Sheller MJ, et al., 2019](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6589345)

-- [Yang Y, et al., 2019](https://arxiv.org/abs/1902.04885)

-- [McMahan HB, et al., 2016](https://arxiv.org/abs/1602.05629)

+### Framework Compatibility

+OpenFL is backend-agnostic. It comes with support for popular NumPy-based ML frameworks like TensorFlow, PyTorch, and JAX, which should be installed separately. Users may extend the list of supported backends if needed.

-### Supported Aggregation Algorithms

-| Algorithm Name | Paper | PyTorch implementation | TensorFlow implementation | Other frameworks compatibility |

+### Aggregation Algorithms

+OpenFL supports popular aggregation algorithms out-of-the-box, with more algorithms coming soon.

+| | Reference | PyTorch backend | TensorFlow backend | NumPy backend |

| -------------- | ----- | :--------------------: | :-----------------------: | :----------------------------: |

-| FedAvg | [McMahan et al., 2017](https://arxiv.org/pdf/1602.05629.pdf) | ✅ | ✅ | ✅ |

-| FedProx | [Li et al., 2020](https://arxiv.org/pdf/1812.06127.pdf) | ✅ | ✅ | ❌ |

-| FedOpt | [Reddi et al., 2020](https://arxiv.org/abs/2003.00295) | ✅ | ✅ | ✅ |

-| FedCurv | [Shoham et al., 2019](https://arxiv.org/pdf/1910.07796.pdf) | ✅ | ❌ | ❌ |

-

-## Support

-The OpenFL community is growing, and we invite you to be a part of it. Join the [Slack channel](https://join.slack.com/t/openfl/shared_invite/zt-ovzbohvn-T5fApk05~YS_iZhjJ5yaTw) to connect with fellow enthusiasts, share insights, and contribute to the future of federated learning.

+| FedAvg | [McMahan et al., 2017](https://arxiv.org/pdf/1602.05629.pdf) | yes | yes | yes |

+| FedOpt | [Reddi et al., 2020](https://arxiv.org/abs/2003.00295) | yes | yes | yes |

+| FedProx | [Li et al., 2020](https://arxiv.org/pdf/1812.06127.pdf) | yes | yes | - |

+| FedCurv | [Shoham et al., 2019](https://arxiv.org/pdf/1910.07796.pdf) | yes | - | - |
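
For readers new to the algorithms in this table, here is a minimal sketch of the sample-weighted averaging at the core of FedAvg (McMahan et al., 2017). It does not use OpenFL's aggregation interface: the `fedavg` function and the dict-of-lists parameter layout are assumptions made for illustration only.

```python
# Hypothetical sketch of FedAvg-style aggregation; not the OpenFL implementation.

def fedavg(updates):
    """Average parameters across collaborators, weighted by local sample count.

    updates: list of (params, num_samples) pairs, where params maps a
    parameter name to a flat list of floats with the same shape on every site.
    """
    total = sum(num_samples for _, num_samples in updates)
    reference = updates[0][0]
    return {
        name: [
            sum(params[name][i] * n for params, n in updates) / total
            for i in range(len(reference[name]))
        ]
        for name in reference
    }


# A site with 3 samples counts three times as much as a site with 1 sample.
aggregated = fedavg([
    ({"w": [1.0, 2.0]}, 1),
    ({"w": [3.0, 6.0]}, 3),
])
```

Weighting by sample count keeps a collaborator with little data from pulling the global model as strongly as one with a large dataset.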

-Consider subscribing to the OpenFL mail list openfl-announce@lists.lfaidata.foundation

+## Contributing

+We welcome contributions! Please refer to the [contributing guidelines](https://openfl.readthedocs.io/en/latest/contributing.html).

-See you there!

+The OpenFL community is expanding, and we encourage you to join us. Connect with other enthusiasts, share knowledge, and contribute to the advancement of federated learning by joining our [Slack channel](https://join.slack.com/t/openfl/shared_invite/zt-ovzbohvn-T5fApk05~YS_iZhjJ5yaTw).

-We also always welcome questions, issue reports, and suggestions via:

+Stay updated by subscribing to the OpenFL mailing list: [openfl-announce@lists.lfaidata.foundation](mailto:openfl-announce@lists.lfaidata.foundation).

-* [GitHub Issues](https://github.com/securefederatedai/openfl/issues)

-* [GitHub Discussions](https://github.com/securefederatedai/openfl/discussions)

## License

This project is licensed under [Apache License Version 2.0](LICENSE). By contributing to the project, you agree to the license and copyright terms therein and release your contribution under these terms.

diff --git a/docs/about/features_index/taskrunner.rst b/docs/about/features_index/taskrunner.rst

index 37e050e5a3..66abcf6868 100644

--- a/docs/about/features_index/taskrunner.rst

+++ b/docs/about/features_index/taskrunner.rst

@@ -7,22 +7,17 @@

TaskRunner API

================

-Let's take a deeper dive into the Task Runner API. If you haven't already, we suggest checking out the :ref:`quick_start` for a primer on doing a simple experiment on a single node.

-

-The steps to transition from a local experiment to a distributed federation can be understood best with the following diagram.

+This is a deep dive into the TaskRunner API. To gain familiarity with this API, we recommend going through the `quickstart <../../tutorials/taskrunner.html>`_ guide. Note that the quickstart guide is focused on simulating an experiment locally. The design choices of this API are best understood when transitioning from a local experiment to a distributed federation, which is how real-world federated learning experiments are conducted.

.. figure:: ../../images/openfl_flow.png

-.. centered:: Overview of a Task Runner experiment distributed across multiple nodes

-:

-

-The Task Runner API uses short-lived components in a federation, which is terminated when the experiment is finished. The components are as follows:

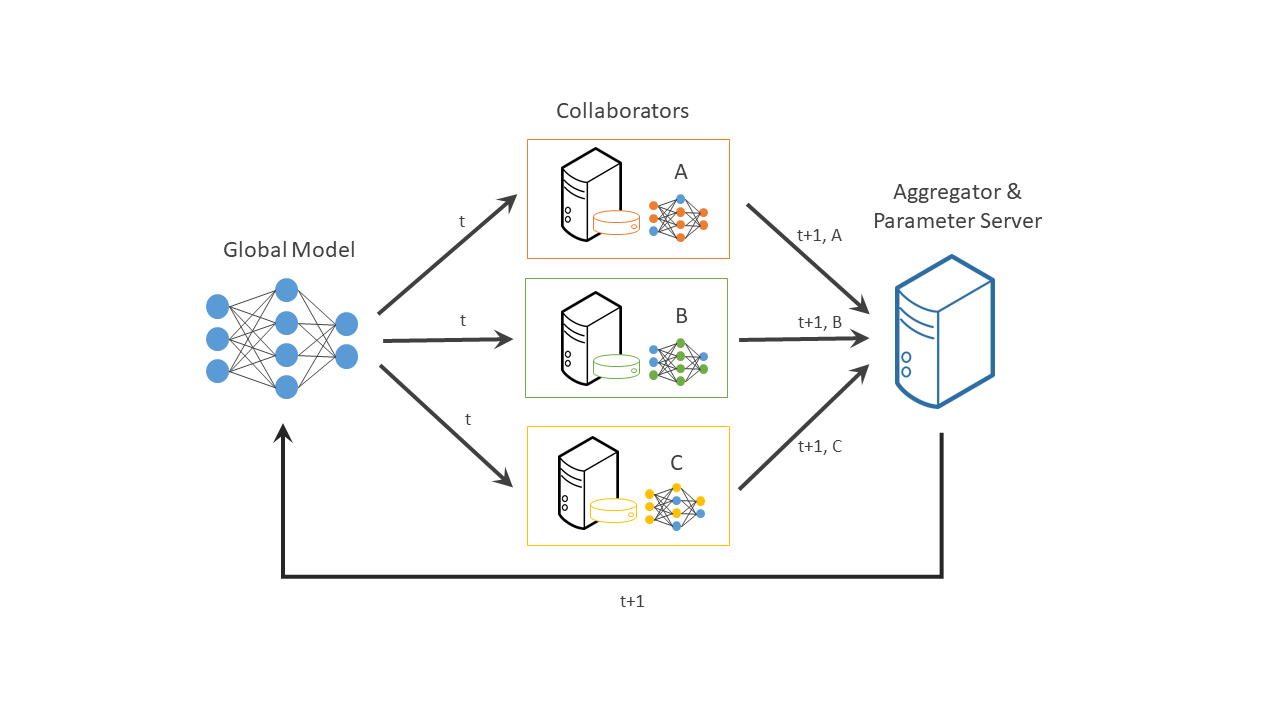

+The TaskRunner API uses short-lived components in a federation, which are terminated once the experiment finishes. These components are:

-- The *Collaborator* uses a local dataset to train a global model and the *Aggregator* receives model updates from *Collaborators* and aggregates them to create the new global model.

-- The *Aggregator* is framework-agnostic, while the *Collaborator* can use any deep learning frameworks, such as `TensorFlow `_\* \ or `PyTorch `_\*\.

+- The :code:`Collaborator` uses a local dataset to train a global model, and the :code:`Aggregator` receives model updates from every :code:`Collaborator` and aggregates them to create the new global model.

+- The :code:`Aggregator` is framework-agnostic, while the :code:`Collaborator` can use any deep learning framework, such as `TensorFlow `_\* \ or `PyTorch `_\*\.

-For this workflow, you modify the federation workspace to your requirements by editing the Federated Learning plan (FL plan) along with the Python\*\ code that defines the model and the data loader. The FL plan is a `YAML `_ file that defines the collaborators, aggregator, connections, models, data, and any other parameters that describe the training.

+For this workflow, one needs to modify the federation workspace to fit their requirements by editing the Federated Learning plan (FL plan) along with the Python\*\ code that defines the model and the data loader. The FL plan is a `YAML `_ file that defines the collaborators, aggregator, connections, models, data, and any other parameters that describe the training.

.. _plan_settings:

+

+

+

+
