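First, a disclaimer: the source code used in this experiment was modified from the original source code.

(New) Attached is the curve of mAP against the number of training iterations.

(New) Attached are the curves of test accuracy against the number of iterations for the 20 object classes (which include person).

The figures show, in order: the curves for all classes, the curves for the first 10 classes, and the curves for the last 10 classes. They are plotted separately so that the trends are easier to see.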
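For readers who want to reproduce this kind of split, here is a minimal sketch, not the script used for the figures in this post. It assumes the per-class scores have already been collected into a dict keyed by class name; `split_curves` and `plot_groups` are hypothetical helper names, and matplotlib is an optional dependency that is imported only when plotting.

```python
# The 20 PASCAL VOC object classes, in the usual alphabetical order.
VOC_CLASSES = (
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
)


def split_curves(curves, classes=VOC_CLASSES, group_size=10):
    """Split {class name: [score per checkpoint]} into groups of classes."""
    groups = []
    for start in range(0, len(classes), group_size):
        names = classes[start:start + group_size]
        groups.append({name: curves[name] for name in names if name in curves})
    return groups


def plot_groups(iterations, groups):
    """Draw one figure per group of classes (requires matplotlib)."""
    import matplotlib.pyplot as plt  # lazy import: optional dependency

    for group in groups:
        plt.figure()
        for name, values in group.items():
            plt.plot(iterations, values, label=name)
        plt.xlabel("iteration")
        plt.ylabel("per-class score")
        plt.legend()
    plt.show()
```

Calling `split_curves(curves)` with all 20 classes returns two dicts of 10 classes each, which can then be passed to `plot_groups` together with the list of checkpoint iterations.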

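(New) The results of evaluating the model on the test set at each iteration count are attached at the end (see the validation results).

SSD300, iterations: 55000.

Below are the final results on the test set (omitted; see the validation results).

Increase the number of iterations to 60000.

SSD300, iterations: 60000.

Below are the final results on the test set (omitted; see the validation results).

The loss against the number of iterations for this experiment is shown in Figure 16.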

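I modified the forward function in multibox_loss.py slightly, in a way that differs a little from what is commonly described online. (When I made the change the way they describe it, the trained model only produced predicted box locations, with no predicted labels, when I ran test.py; eval.py just scanned through the images without printing any information; and live.py just displayed the original frames.) My version is shown below.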

    def forward(self, predictions, targets):
        """Multibox Loss
        Args:
            predictions (tuple): A tuple containing loc preds, conf preds,
            and prior boxes from SSD net.
                conf shape: torch.size(batch_size,num_priors,num_classes)
                loc shape: torch.size(batch_size,num_priors,4)
                priors shape: torch.size(num_priors,4)

            targets (tensor): Ground truth boxes and labels for a batch,
                shape: [batch_size,num_objs,5] (last idx is the label).
        """
        loc_data, conf_data, priors = predictions
        num = loc_data.size(0)
        priors = priors[:loc_data.size(1), :]
        num_priors = (priors.size(0))
        num_classes = self.num_classes

        # match priors (default boxes) and ground truth boxes
        loc_t = torch.Tensor(num, num_priors, 4)
        conf_t = torch.LongTensor(num, num_priors)
        for idx in range(num):
            truths = targets[idx][:, :-1].data
            labels = targets[idx][:, -1].data
            defaults = priors.data
            match(self.threshold, truths, defaults, self.variance, labels,
                  loc_t, conf_t, idx)
        if self.use_gpu:
            loc_t = loc_t.cuda()
            conf_t = conf_t.cuda()
        # wrap targets
        loc_t = Variable(loc_t, requires_grad=False)
        conf_t = Variable(conf_t, requires_grad=False)

        pos = conf_t > 0
        num_pos = pos.sum(dim=1, keepdim=True)

        # Localization Loss (Smooth L1)
        # Shape: [batch,num_priors,4]
        pos_idx = pos.unsqueeze(pos.dim()).expand_as(loc_data)
        loc_p = loc_data[pos_idx].view(-1, 4)
        loc_t = loc_t[pos_idx].view(-1, 4)
        loss_l = F.smooth_l1_loss(loc_p, loc_t, size_average=False)

        # Compute max conf across batch for hard negative mining
        batch_conf = conf_data.view(-1, self.num_classes)
        loss_c = log_sum_exp(batch_conf) - batch_conf.gather(1, conf_t.view(-1, 1))

        # Hard Negative Mining
        loss_c = loss_c.view(num, -1)
        loss_c[pos] = 0  # filter out pos boxes for now
        _, loss_idx = loss_c.sort(1, descending=True)
        _, idx_rank = loss_idx.sort(1)
        num_pos = pos.long().sum(1, keepdim=True)
        num_neg = torch.clamp(self.negpos_ratio*num_pos, max=pos.size(1)-1)
        neg = idx_rank < num_neg.expand_as(idx_rank)

        # Confidence Loss Including Positive and Negative Examples
        pos_idx = pos.unsqueeze(2).expand_as(conf_data)
        neg_idx = neg.unsqueeze(2).expand_as(conf_data)
        conf_p = conf_data[(pos_idx+neg_idx).gt(0)].view(-1, self.num_classes)
        targets_weighted = conf_t[(pos+neg).gt(0)]
        loss_c = F.cross_entropy(conf_p, targets_weighted, size_average=False)

        # Sum of losses: L(x,c,l,g) = (Lconf(x, c) + αLloc(x,l,g)) / N
        # N = num_pos.data.sum()
        # N = num_pos.data.sum().double()
        loss_l = loss_l.double()  # delete or remain?
        loss_c = loss_c.double()  # delete or remain?
        # loss_l /= N  # delete or remain?
        # loss_c /= N  # delete or remain?
        return loss_l, loss_c

The fix most commonly described online seems to be the following (I say "seems" because I find the descriptions vague).

    def forward(self, predictions, targets):
        """Multibox Loss
        Args:
            predictions (tuple): A tuple containing loc preds, conf preds,
            and prior boxes from SSD net.
                conf shape: torch.size(batch_size,num_priors,num_classes)
                loc shape: torch.size(batch_size,num_priors,4)
                priors shape: torch.size(num_priors,4)

            targets (tensor): Ground truth boxes and labels for a batch,
                shape: [batch_size,num_objs,5] (last idx is the label).
        """
        loc_data, conf_data, priors = predictions
        num = loc_data.size(0)
        priors = priors[:loc_data.size(1), :]
        num_priors = (priors.size(0))
        num_classes = self.num_classes

        # match priors (default boxes) and ground truth boxes
        loc_t = torch.Tensor(num, num_priors, 4)
        conf_t = torch.LongTensor(num, num_priors)
        for idx in range(num):
            truths = targets[idx][:, :-1].data
            labels = targets[idx][:, -1].data
            defaults = priors.data
            match(self.threshold, truths, defaults, self.variance, labels,
                  loc_t, conf_t, idx)
        if self.use_gpu:
            loc_t = loc_t.cuda()
            conf_t = conf_t.cuda()
        # wrap targets
        loc_t = Variable(loc_t, requires_grad=False)
        conf_t = Variable(conf_t, requires_grad=False)

        pos = conf_t > 0
        num_pos = pos.sum(dim=1, keepdim=True)

        # Localization Loss (Smooth L1)
        # Shape: [batch,num_priors,4]
        pos_idx = pos.unsqueeze(pos.dim()).expand_as(loc_data)
        loc_p = loc_data[pos_idx].view(-1, 4)
        loc_t = loc_t[pos_idx].view(-1, 4)
        loss_l = F.smooth_l1_loss(loc_p, loc_t, size_average=False)

        # Compute max conf across batch for hard negative mining
        batch_conf = conf_data.view(-1, self.num_classes)
        loss_c = log_sum_exp(batch_conf) - batch_conf.gather(1, conf_t.view(-1, 1))

        # Hard Negative Mining
        loss_c = loss_c.view(num, -1)
        loss_c[pos] = 0  # filter out pos boxes for now
        _, loss_idx = loss_c.sort(1, descending=True)
        _, idx_rank = loss_idx.sort(1)
        num_pos = pos.long().sum(1, keepdim=True)
        num_neg = torch.clamp(self.negpos_ratio*num_pos, max=pos.size(1)-1)
        neg = idx_rank < num_neg.expand_as(idx_rank)

        # Confidence Loss Including Positive and Negative Examples
        pos_idx = pos.unsqueeze(2).expand_as(conf_data)
        neg_idx = neg.unsqueeze(2).expand_as(conf_data)
        conf_p = conf_data[(pos_idx+neg_idx).gt(0)].view(-1, self.num_classes)
        targets_weighted = conf_t[(pos+neg).gt(0)]
        loss_c = F.cross_entropy(conf_p, targets_weighted, size_average=False)

        # Sum of losses: L(x,c,l,g) = (Lconf(x, c) + αLloc(x,l,g)) / N
        # N = num_pos.data.sum()
        N = num_pos.data.sum().double()
        loss_l = loss_l.double()  # delete or remain?
        loss_c = loss_c.double()  # delete or remain?
        loss_l /= N  # delete or remain?
        loss_c /= N  # delete or remain?
        return loss_l, loss_c

The only difference between the two versions is whether the following is present:

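    N = num_pos.data.sum().double()

together with

    loss_l /= N  # delete or remain?
    loss_c /= N  # delete or remain?

I have seen quite a few experts write "change line 114 to ...", but what I cannot tell is whether I should only replace the code on line 114, or also delete

    loss_l /= N  # delete or remain?
    loss_c /= N  # delete or remain?

at the same time.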

In other words, should the code be changed to:

    N = num_pos.data.sum().double()
    loss_l = loss_l.double()
    loss_c = loss_c.double()
    loss_l /= N
    loss_c /= N
    return loss_l, loss_c

or to:

    N = num_pos.data.sum().double()
    loss_l = loss_l.double()
    loss_c = loss_c.double()
    return loss_l, loss_c

I admit it: if I cannot follow what the experts wrote, that is because I am slow.

Still, I find the description ambiguous.
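To make the two readings concrete, here is a minimal pure-Python sketch. It is an illustration only: `finalize_losses` and its `normalize` flag are hypothetical names that do not exist in ssd.pytorch, and plain floats stand in for the tensors. Under the first reading both summed losses are divided by N; under the second reading N is computed but never used, so the raw sums are returned.

```python
def finalize_losses(loss_l, loss_c, num_pos, normalize):
    """Return (loss_l, loss_c) under one of the two readings.

    loss_l, loss_c: summed localization / confidence losses of a batch.
    num_pos: total number of matched (positive) priors in the batch (N).
    normalize: True  -> first reading, divide both sums by N;
               False -> second reading, return the raw sums.
    """
    loss_l = float(loss_l)
    loss_c = float(loss_c)
    if normalize:
        # Guard added for this sketch only: N == 0 would divide by zero.
        n = max(float(num_pos), 1.0)
        loss_l /= n
        loss_c /= n
    return loss_l, loss_c


# Arbitrary illustrative numbers, not measurements from this experiment.
print(finalize_losses(120.0, 300.0, num_pos=60, normalize=False))  # (120.0, 300.0)
print(finalize_losses(120.0, 300.0, num_pos=60, normalize=True))   # (2.0, 5.0)
```

The two readings differ only by the factor N. Since N does not depend on the network weights, the gradients should differ by the same factor, which acts roughly like rescaling the learning rate from batch to batch.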

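Figures 17 and 18 are both screenshots of the steps described by two of these experts; click a figure number to jump to the corresponding page.

As mentioned above, following their modification I could not get the expected results. (Perhaps I made a mistake, or misunderstood their description.)

With their modification, the curve of the loss against the number of iterations does look somewhat "nicer", as shown in Figure 19.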
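Apart from the normalization lines, the two versions of forward are identical, and the least obvious step they share is hard negative mining. The sketch below re-implements the double-sort rank trick for a single image with plain Python lists. It is an illustration under assumptions, not the library code: `hard_negative_mask` is a hypothetical helper, and the default ratio of 3 is my assumption about how `negpos_ratio` is usually set.

```python
def hard_negative_mask(losses, positive, negpos_ratio=3):
    """Select the hardest negatives for one image.

    losses: per-prior confidence losses.
    positive: per-prior booleans, True where the prior matched an object.
    Returns per-prior booleans, True for the priors kept as negatives.
    """
    num_priors = len(losses)
    # Zero out positives so they sort last (mirrors `loss_c[pos] = 0`).
    masked = [0.0 if is_pos else loss for loss, is_pos in zip(losses, positive)]
    # First sort: prior indices ordered by descending loss.
    loss_idx = sorted(range(num_priors), key=lambda i: masked[i], reverse=True)
    # Second sort: idx_rank[i] is the position of prior i in that ordering.
    idx_rank = sorted(range(num_priors), key=lambda p: loss_idx[p])
    # Keep at most negpos_ratio negatives per positive, capped as in the original.
    num_pos = sum(positive)
    num_neg = min(negpos_ratio * num_pos, num_priors - 1)
    return [rank < num_neg for rank in idx_rank]


losses = [0.2, 0.9, 0.1, 0.7, 0.5, 0.3]
positive = [False, True, False, False, False, False]
print(hard_negative_mask(losses, positive))
# [False, False, False, True, True, True]
```

With one positive and a ratio of 3, the three negatives with the largest loss (priors 3, 4 and 5) are kept; the positive prior itself is excluded because its loss was zeroed before sorting.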

Increase the number of iterations to 105000.

SSD300, iterations: 105000.

Below are the final results on the test set (omitted; see the validation results).

A PyTorch implementation of Single Shot MultiBox Detector from the 2016 paper by Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C. Berg. The official and original Caffe code can be found here.

- Install PyTorch by selecting your environment on the website and running the appropriate command.

- Clone this repository.

- Note: We currently only support Python 3+.

- Then download the dataset by following the instructions below.

- We now support Visdom for real-time loss visualization during training!

- To use Visdom in the browser:

    # First install Python server and client
    pip install visdom
    # Start the server (probably in a screen or tmux)
    python -m visdom.server

- Then (during training) navigate to http://localhost:8097/ (see the Train section below for training details).

- Note: For training, we currently support VOC and COCO, and aim to add ImageNet support soon.

To make things easy, we provide bash scripts to handle the dataset downloads and setup for you. We also provide simple dataset loaders that inherit torch.utils.data.Dataset, making them fully compatible with the torchvision.datasets API.
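As a rough illustration of what inheriting from torch.utils.data.Dataset involves, a map-style dataset only has to implement `__len__` and `__getitem__`. The class below is a hypothetical toy, not one of the loaders shipped with the repository; the fallback base class exists only so that the sketch also runs where PyTorch is not installed.

```python
try:
    from torch.utils.data import Dataset  # real base class when PyTorch is installed
except ImportError:  # fallback so the sketch still runs without PyTorch
    Dataset = object


class ToyDetection(Dataset):
    """Hypothetical map-style dataset: one (image, target) pair per index."""

    def __init__(self, samples, transform=None):
        # samples: list of (image, boxes) pairs, where boxes is a list of
        # [xmin, ymin, xmax, ymax, label] rows (the label is the last entry).
        self.samples = samples
        self.transform = transform

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        image, boxes = self.samples[index]
        if self.transform is not None:
            image = self.transform(image)
        return image, boxes


# Placeholder sample: a string stands in for the image data.
dataset = ToyDetection([("image-0", [[0.1, 0.1, 0.5, 0.5, 14]])])
image, boxes = dataset[0]
```

Because the class follows the standard Dataset protocol, an instance can be handed to torch.utils.data.DataLoader, although detection targets of varying length generally need a custom collate function.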

Microsoft COCO: Common Objects in Context

    # specify a directory for dataset to be downloaded into, else default is ~/data/
    sh data/scripts/COCO2014.sh

PASCAL VOC: Visual Object Classes

    # specify a directory for dataset to be downloaded into, else default is ~/data/
    sh data/scripts/VOC2007.sh # <directory>

    # specify a directory for dataset to be downloaded into, else default is ~/data/
    sh data/scripts/VOC2012.sh # <directory>

- First download the fc-reduced VGG-16 PyTorch base network weights at: https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

- By default, we assume you have downloaded the file in the ssd.pytorch/weights dir:

    mkdir weights
    cd weights
    wget https://s3.amazonaws.com/amdegroot-models/vgg16_reducedfc.pth

- To train SSD using the train script simply specify the parameters listed in train.py as a flag or manually change them.

    python train.py

- Note:

- For training, an NVIDIA GPU is strongly recommended for speed.

- For instructions on Visdom usage/installation, see the Installation section.

- You can pick up training from a checkpoint by specifying the path as one of the training parameters (again, see train.py for options)

To evaluate a trained network:

    python eval.py

You can specify the parameters listed in the eval.py file by flagging them or manually changing them.
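The mAP figures quoted in this document are means of per-class average precision (AP). As a rough sketch of how the AP of one class can be computed, the function below implements the 11-point interpolated AP of PASCAL VOC2007. That eval.py uses this variant by default is an assumption on my part, and `voc07_ap` and `mean_ap` are illustrative names.

```python
def voc07_ap(recall, precision):
    """11-point interpolated average precision (PASCAL VOC2007 style).

    recall, precision: equal-length lists with one entry per detection,
    computed after sorting the detections by descending confidence.
    """
    ap = 0.0
    for k in range(11):
        threshold = k / 10
        # Best precision among operating points whose recall reaches the threshold.
        candidates = [p for r, p in zip(recall, precision) if r >= threshold]
        ap += (max(candidates) if candidates else 0.0) / 11.0
    return ap


def mean_ap(per_class_ap):
    """mAP: the unweighted mean of the per-class APs."""
    return sum(per_class_ap.values()) / len(per_class_ap)
```

For example, a class whose detections never exceed a recall of 0.5 but are fully precise up to that point scores 6/11, because only the six thresholds from 0.0 to 0.5 contribute.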

| Original | Converted weiliu89 weights | From scratch w/o data aug | From scratch w/ data aug |
|---|---|---|---|
| 77.2 % | 77.26 % | 58.12 % | 77.43 % |

GTX 1060: ~45.45 FPS
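How this FPS figure was measured is not stated. As a generic sketch, throughput can be estimated by timing repeated calls of the inference step; `measure_fps` and its `step` argument are hypothetical names, and `step` stands for whatever runs the detector on one frame.

```python
import time


def measure_fps(step, num_frames=100, warmup=10):
    """Call `step()` repeatedly and return the average number of calls per second."""
    for _ in range(warmup):  # let caches and lazy initialisation settle
        step()
    start = time.perf_counter()
    for _ in range(num_frames):
        step()
    elapsed = time.perf_counter() - start
    return num_frames / elapsed


# Dummy workload standing in for one forward pass.
fps = measure_fps(lambda: sum(range(10000)), num_frames=50, warmup=5)
```

When timing a model on a GPU, `step` should also wait for the device to finish (for example by calling torch.cuda.synchronize()), otherwise the asynchronous kernel launches make the result look faster than it really is.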

- We are trying to provide PyTorch state_dicts (dict of weight tensors) of the latest SSD model definitions trained on different datasets.

- Currently, we provide the following PyTorch models:

- SSD300 trained on VOC0712 (newest PyTorch weights)

- SSD300 trained on VOC0712 (original Caffe weights)

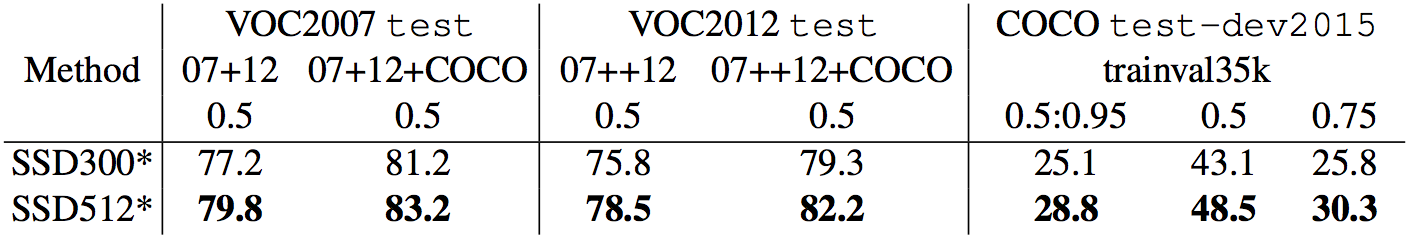

- Our goal is to reproduce this table from the original paper

- Make sure you have jupyter notebook installed.

- Two alternatives for installing jupyter notebook:

    # make sure pip is upgraded
    pip3 install --upgrade pip
    # install jupyter notebook
    pip install jupyter
    # Run this inside ssd.pytorch
    jupyter notebook

- Now navigate to demo/demo.ipynb at http://localhost:8888 (by default) and have at it!

- Works on CPU (may have to tweak cv2.waitKey for optimal fps) or on an NVIDIA GPU

- This demo currently requires opencv2+ w/ python bindings and an onboard webcam

- You can change the default webcam in demo/live.py

- Install the imutils package to leverage multi-threading on CPU:

    pip install imutils

- Running python -m demo.live opens the webcam and begins detecting!

We have accumulated the following to-do list, which we hope to complete in the near future

- Still to come:

- Support for the MS COCO dataset

- Support for SSD512 training and testing

- Support for training on custom datasets

Note: Unfortunately, this is just a hobby of ours and not a full-time job, so we'll do our best to keep things up to date, but no guarantees. That being said, thanks to everyone for your continued help and feedback as it is really appreciated. We will try to address everything as soon as possible.

- Wei Liu, et al. "SSD: Single Shot MultiBox Detector." ECCV2016.

- Original Implementation (CAFFE)

- A huge thank you to Alex Koltun and his team at Webyclip for their help in finishing the data augmentation portion.

- A list of other great SSD ports that were sources of inspiration (especially the Chainer repo):