Some of the components were damaged while transporting the drone on multiple airplane trips. I would need to buy new components to continue work on this project, which unfortunately I don't have the funds for :( The project is on hold until I can put together enough to continue.

Decentralized drone swarm communication for search and rescue missions

Problems with current methods: they rely heavily on global infrastructure such as GPS and a central communication unit

- Hardware
  - Positioning
    - PXFmini
      - 3D Gyroscope
      - Barometer
      - 3D Compass
  - Flying
    - Power
      - LiPo 1300mAh 4S 45C
    - Thrust
      - 5045GF with RS2205 Motor
    - Drone Frame
      - 3D Printed
  - Communications
    - Low Power Bluetooth
      - nRF52832

- Software
  - Decentralized Autopilot with reinforcement learning
    - Input array: [n, r, phi, theta], where n is the total number of drones, r is the distance away in pixels, phi is the azimuth angle, and theta is the elevation angle
      - r is assumed to be infinity when out of range
    - Cover the area in the smallest time possible while maintaining communication distance
      - Cost function should be affected by total flight time and the integral of the distance between drones
    - Long Short-Term Memory (LSTM) network with outputs as velocity deltas (shape [dx, dy, dz])
  - Visualization
    - Python script to visualize position and direction of drones in drone swarm
  - Training environment for autopilot
    - Drone physics
      - Acceleration
      - Gravity
      - Bounding boxes and collisions
      - Air Resistance
  - Object Detection
    - YOLOv1 with modified class output, input size [448, 448]
    - Dataset
      - KITTI Vision Benchmark
      - [WIP] COCO Dataset
  - Hardware interfacing
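The autopilot input and cost described in the list above could be sketched roughly as follows. This is only an illustration: the function names, the `max_range` parameter, the weights `w_time` and `w_dist`, and the choice to zero the angles when a neighbour is out of range are all assumptions, not part of the project code.

```python
import numpy as np


def relative_observation(own_pos, other_pos, n_drones, max_range):
    """Return [n, r, phi, theta] for one neighbour as seen from own_pos."""
    offset = np.asarray(other_pos, dtype=float) - np.asarray(own_pos, dtype=float)
    r = float(np.linalg.norm(offset))
    if r > max_range:
        # Out of range: r is treated as infinity. The angles are unknown,
        # so they are set to 0 here (an assumption).
        return np.array([n_drones, np.inf, 0.0, 0.0])
    phi = float(np.arctan2(offset[1], offset[0]))  # azimuth angle
    theta = float(np.arcsin(offset[2] / r)) if r > 0 else 0.0  # elevation angle
    return np.array([n_drones, r, phi, theta])


def swarm_cost(times, mean_distance, w_time=1.0, w_dist=1.0):
    """Combine total flight time with the time integral of inter-drone distance."""
    times = np.asarray(times, dtype=float)
    mean_distance = np.asarray(mean_distance, dtype=float)
    flight_time = times[-1] - times[0]
    # Trapezoidal approximation of the integral of distance over time.
    dist_integral = np.sum(
        0.5 * (mean_distance[1:] + mean_distance[:-1]) * np.diff(times)
    )
    return w_time * flight_time + w_dist * dist_integral


obs = relative_observation([0, 0, 0], [3, 4, 0], n_drones=5, max_range=10.0)
cost = swarm_cost([0.0, 1.0, 2.0], [2.0, 2.0, 2.0])
```

Here `obs` holds the neighbour at distance 5 in the horizontal plane, and `cost` adds 2 time units of flight to a distance integral of 4.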

wlx4cedfbb833a6 -- Asus AC56R Wifi Adapter (rtl8812au driver) -- Used for WiFi Access

wlp2s0 -- Builtin Intel Wifi Adapter -- Used for creating wireless access point

nmcli device status -- lists network interfaces and status

arp -a -- Lists all devices connected to hotspot

service network-manager restart -- restarts wifi service

sudo ./ArduCopter.elf -A tcp:10.42.0.74:8080 -B /dev/ttyAMA0 -- starts AutoPilot service where -A is serial connection and -B is GPS connection

ssh -i NVIDIA.pem [email protected] -- Connect to AWS Training Instance

http://forum.erlerobotics.com/t/gps-tutorial-how-to-address-a-no-fix/1009

- Calibrate GPS Outside (if this doesn't work try http://forum.erlerobotics.com/t/gps-tutorial-how-to-address-a-no-fix/1009)
- Fix RPi Camera
- Calibrate ESCs
- Test Motors
- Flight test
- Get location from PXFMini
- Evaluate types of machine learning (look into DQNs)
- Create training scenario
- Fix documentation for project
- Setup hotspot on laptop
- Configure Erle PXFMini
- Build and compile APM for PXFMini and Pi0
- Buy USB Wifi Dongle
- Buy micro-HDMI to HDMI Adapter
- Visualize convolutional kernels
- Reimplement batch norm
- Add YOLOv1 Full Architecture
- Attempt restore weights
- Switch to dropout
- Switch to tiny-YOLO architecture
- Make validation testing more frequent
- Validation time image saving
- Refresh dataset
- Add documentation for detectionNet
- Reshape func
- Define TP / TN / FP / FN
- Fix non-max suppression
- Calculate IOU
- Ensure weight saving saves current epoch
- Add test evaluation
- Add checkpoint saving
- Add ability to restore weights
- Add augmentation after read
- np Pickle Writer
- Implement data augmentation
- Add batch norm
- Interpret output with confidence filtering
- Linearize last layer
- Normalize xy
- Normalize wh
- Add label output in Tensorboard
- Add checkpoint saving
- Add FC Layers
- Make sure capable of overfitting
- imshow Output
- Interpret Output
- Check labels for accuracy
- Check values of init labels
- Update descriptions about dataset
- Update software description in README

- Calibrate PiCamera (Completed)
- Solder motors to ESCs (Completed Nov. 1st)
- Screw in new camera and PDB mount (Completed Nov. 1st)
- Install new propellers (Completed Oct. 31st)
- Write function to calibrate camera intrinsic matrix (Completed Oct. 31st)
- Implement photo functionality into camera class (Completed Oct. 31st)
- Design camera mount (Completed Oct. 29th)
- Design power distributor board model (Completed Oct. 30th)
- Recut and redrill carbon fiber tubes (Completed Oct. 26th)
- Reorder 2 propellers and LiPo charger (Completed Oct. 27th)
- Add Camera class and integrate into Drone class (Completed Oct. 23rd)
- Add directionality to Drone class (Completed Oct. 23rd)
- Evaluate plausibility of homogeneous coordinate system (Completed Oct. 22nd)
- Cut and drill carbon fiber tubes (Completed Oct. 19th)
- Finish Drone Summary (Completed Oct. 19th)
- Draw Circuits (Completed Oct. 19th)
- Explain details of motors, thrust, etc. (Completed Oct. 19th)
- Update Printing Logs (Completed Oct. 18th)
- Find Thrust and Voltages required given current motors (Completed Oct. 18th)
- Decide on Power Supply (Completed Oct. 18th)
- Determine right electronic speed controller (ESC) to buy (Completed Oct. 18th)
- Print Arms (Completed Oct. 18th)
- Calculate Approximate Weight of each Drone (Completed Oct. 17th)
- Print Carbon Fiber Jigs (Completed Oct. 17th)
- Print Bumpers (Completed Oct. 16th)
- Print Sides (Completed Oct. 16th)
- Print Lower Plate (Completed Oct. 16th)
- Print Top Plate (Completed Oct. 16th)
- Order PXFMini parts (Completed Oct. 15th)
- Order Firefly Parts (Completed Oct. 12th)
- Realtime rendering of 3D Space (Completed Oct. 2)
- Particle based display for drone (Completed Sept. 28)
- Create initial variables for drones (Completed Sept. 26)
- Create drone class (Completed Sept. 26)

A modified version of YOLOv1 is implemented

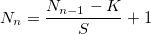

Dimensionality of the next layer can be computed as follows:
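A common form of this relation is sketched here, assuming the layers use TensorFlow's SAME or VALID padding. The padding mode is not stated in this README, so this is an assumption; most rows of the layer tables are consistent with SAME. The function names are illustrative only.

```python
import math


def out_size_same(in_size, stride):
    """Spatial output size with SAME padding: ceil(in / stride)."""
    return math.ceil(in_size / stride)


def out_size_valid(in_size, kernel, stride):
    """Spatial output size with VALID (no) padding: floor((in - k) / stride) + 1."""
    return (in_size - kernel) // stride + 1


# A 448-pixel input through a stride-2 layer with SAME padding gives 224,
# which matches the conv1 rows of the tables that use a [2, 2] stride.
same_example = out_size_same(448, 2)
valid_example = out_size_valid(448, 7, 2)
```

The filter size only affects the result in the VALID case; with SAME padding the output size depends on the stride alone.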

Three different models have been implemented, as follows:

- SqueezeNet

| Layer | Output Dimensions | Filter Size | Stride | Depth |

|---|---|---|---|---|

| images | [448, 448, 3] | - | - | - |

| conv1 | [224, 224, 96] | [7, 7] | [2, 2] | 96 |

| maxpool1 | [112, 112, 96] | [3, 3] | [2, 2] | - |

| fire1 | [112, 112, 64] | - | - | 64 |

| fire2 | [112, 112, 64] | - | - | 64 |

| fire3 | [112, 112, 128] | - | - | 128 |

| maxpool2 | [56, 56, 128] | [3, 3] | [2, 2] | - |

| fire4 | [56, 56, 128] | - | - | 256 |

| fire5 | [56, 56, 192] | - | - | 384 |

| fire6 | [56, 56, 192] | - | - | 384 |

| fire7 | [56, 56, 256] | - | - | 512 |

| maxpool3 | [28, 28, 256] | [3, 3] | [2, 2] | - |

| fire8 | [28, 28, 256] | - | - | 512 |

| avgpool1 | [12, 12, 256] | [7, 7] | [2, 2] | - |

| fc1 | [1024] | - | - | -1 (flatten) |

| fc2 | [4096] | - | - | - |

| fc3 | [675] | - | - | - |

- Tiny YOLOv1

| Layer | Output Dimensions | Filter Size | Stride | Depth |

|---|---|---|---|---|

| images | [448, 448, 3] | - | - | - |

| conv1 | [448, 448, 16] | [3, 3] | [1, 1] | 16 |

| maxpool1 | [224, 224, 16] | [2, 2] | [2, 2] | - |

| conv2 | [224, 224, 32] | [3, 3] | [1, 1] | 32 |

| maxpool2 | [112, 112, 32] | [2, 2] | [2, 2] | - |

| conv3 | [112, 112, 64] | [3, 3] | [1, 1] | 64 |

| maxpool3 | [56, 56, 64] | [2, 2] | [2, 2] | - |

| conv4 | [56, 56, 128] | [3, 3] | [1, 1] | 128 |

| maxpool4 | [28, 28, 128] | [2, 2] | [2, 2] | - |

| conv5 | [28, 28, 256] | [3, 3] | [1, 1] | 256 |

| maxpool5 | [14, 14, 256] | [2, 2] | [2, 2] | - |

| conv6 | [14, 14, 512] | [3, 3] | [1, 1] | 512 |

| maxpool6 | [7, 7, 512] | [2, 2] | [2, 2] | - |

| conv7 | [7, 7, 1024] | [3, 3] | [1, 1] | 1024 |

| conv8 | [7, 7, 256] | [3, 3] | [1, 1] | 256 |

| conv9 | [7, 7, 512] | [3, 3] | [1, 1] | 512 |

| fc1 | [1024] | - | - | -1 (flatten) |

| fc2 | [4096] | - | - | - |

| fc3 | [675] | - | - | - |

- YOLOv1

| Layer | Output Dimensions | Filter Size | Stride | Depth |

|---|---|---|---|---|

| images | [448, 448, 3] | - | - | - |

| conv1 | [224, 224, 64] | [7, 7] | [2, 2] | 64 |

| maxpool1 | [112, 112, 64] | [2, 2] | [2, 2] | - |

| conv2 | [112, 112, 192] | [3, 3] | [1, 1] | 192 |

| maxpool2 | [56, 56, 192] | [2, 2] | [2, 2] | - |

| conv3 | [56, 56, 128] | [1, 1] | [1, 1] | 128 |

| conv4 | [56, 56, 256] | [3, 3] | [1, 1] | 256 |

| conv5 | [56, 56, 256] | [1, 1] | [1, 1] | 256 |

| conv6 | [56, 56, 512] | [3, 3] | [1, 1] | 512 |

| maxpool3 | [28, 28, 512] | [2, 2] | [2, 2] | - |

| conv7 | [28, 28, 256] | [1, 1] | [1, 1] | 256 |

| conv8 | [28, 28, 512] | [3, 3] | [1, 1] | 512 |

| conv9 | [28, 28, 256] | [1, 1] | [1, 1] | 256 |

| conv10 | [28, 28, 512] | [3, 3] | [1, 1] | 512 |

| conv11 | [28, 28, 256] | [1, 1] | [1, 1] | 256 |

| conv12 | [28, 28, 512] | [3, 3] | [1, 1] | 512 |

| conv13 | [28, 28, 256] | [1, 1] | [1, 1] | 256 |

| conv14 | [28, 28, 512] | [3, 3] | [1, 1] | 512 |

| conv15 | [28, 28, 512] | [1, 1] | [1, 1] | 512 |

| conv16 | [28, 28, 1024] | [3, 3] | [1, 1] | 1024 |

| maxpool4 | [14, 14, 1024] | [2, 2] | [2, 2] | - |

| conv17 | [14, 14, 512] | [1, 1] | [1, 1] | 512 |

| conv18 | [14, 14, 1024] | [3, 3] | [1, 1] | 1024 |

| conv19 | [14, 14, 512] | [1, 1] | [1, 1] | 512 |

| conv20 | [14, 14, 1024] | [3, 3] | [1, 1] | 1024 |

| conv21 | [14, 14, 1024] | [3, 3] | [1, 1] | 1024 |

| conv22 | [7, 7, 1024] | [3, 3] | [2, 2] | 1024 |

| conv23 | [7, 7, 1024] | [3, 3] | [1, 1] | 1024 |

| conv24 | [7, 7, 1024] | [3, 3] | [1, 1] | 1024 |

| fc2 | [4096] | - | - | -1 (flatten) |

| fc3 | [675] | - | - | - |

Batch normalization is applied after every conv and fc layer, before the leaky ReLU

Input Format: img - [batchsize, 448, 448, 3]

labels - [batchsize, sx, sy, B * (C + 4)] where B is number of bounding boxes per grid cell (3) and C is number of classes (4)

Note: Boxes of the form [p1x, p1y, p2x, p2y] have been converted to the form [x, y, w, h]

Two constants are set to correct the imbalance between obj and no_obj boxes,

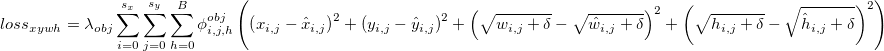

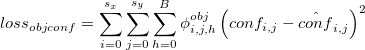

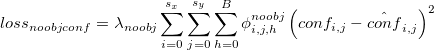

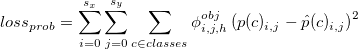

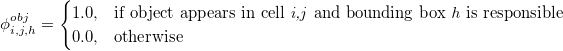

YOLO works by adding the following separate losses,

Where,

lossXYWH is the sum of the squared errors of the x,y,w,h values of bounding boxes for all squares and bounding boxes responsible

lossObjConf is the sum of the squared errors in predicted confidences of all bounding boxes with objects

lossNoobjConf is the sum of the squared errors in predicted confidences of all grid cells with no objects

lossProb is the sum of the squared errors in predicted class probabilities across all grid cells
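The four terms described above can be sketched in NumPy as follows. This is a simplified illustration rather than the project's TensorFlow code: the array shapes, the argument names, and the weights `lambda_coord=5.0` and `lambda_noobj=0.5` (the values used in the YOLOv1 paper) are assumptions, and where the two constants are applied follows the paper rather than anything stated here.

```python
import numpy as np


def yolo_loss(pred_xywh, true_xywh, pred_conf, true_conf, pred_prob, true_prob,
              obj_mask, lambda_coord=5.0, lambda_noobj=0.5):
    """Sum-of-squares YOLO-style loss for one image.

    pred_xywh, true_xywh: [sx, sy, B, 4]
    pred_conf, true_conf, obj_mask: [sx, sy, B] (obj_mask is 1 for responsible boxes)
    pred_prob, true_prob: class probabilities, any matching shape
    """
    noobj_mask = 1.0 - obj_mask
    # Squared x, y, w, h errors, counted only for responsible boxes.
    loss_xywh = np.sum(obj_mask[..., None] * (pred_xywh - true_xywh) ** 2)
    conf_err = (pred_conf - true_conf) ** 2
    loss_obj_conf = np.sum(obj_mask * conf_err)      # boxes with objects
    loss_noobj_conf = np.sum(noobj_mask * conf_err)  # boxes without objects
    loss_prob = np.sum((pred_prob - true_prob) ** 2)
    total = (lambda_coord * loss_xywh + loss_obj_conf
             + lambda_noobj * loss_noobj_conf + loss_prob)
    return total, (loss_xywh, loss_obj_conf, loss_noobj_conf, loss_prob)
```

A perfect prediction gives a total of zero; the two weights control how strongly box-coordinate errors and empty-box confidence errors count relative to the other terms.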

Variable Definitions:

phi is defined as

sx, sy are defined as the number of horizontal and vertical grid cells, respectively

classes is the list of all possible classes

B is the number of bounding boxes per grid cell

A fire module (described in SqueezeNet) is defined as a squeeze layer of 3 1x1 conv2d filters followed by an expand layer of 4 1x1 conv2d filters concatenated with 4 3x3 conv2d filters

Tensorflow version == 1.2.0

GPU == NVIDIA 970M

Batchsize == 4

Learning Rate == 1e-3

Optimizer == Adam Optimizer

Adam Epsilon == 1e-0

Batch Normalization Momentum == 0.9

Batch Normalization Epsilon == 1e-5

sx == 7

sy == 7

B == 3

C == 4

Alpha (Leaky ReLU coefficient) == 0.1
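To make the batch normalization and leaky ReLU settings above concrete, here is a minimal NumPy sketch of one training-time step using the listed momentum, epsilon, and alpha. It is not the TensorFlow code used in this project: the learnable scale and offset are left out, and the function name is an assumption.

```python
import numpy as np


def batch_norm_leaky_relu(x, running_mean, running_var,
                          momentum=0.9, eps=1e-5, alpha=0.1):
    """Normalize a batch over axis 0, then apply leaky ReLU.

    Returns the activations and the updated running statistics.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    # Exponential moving averages, kept for use at inference time.
    new_mean = momentum * running_mean + (1.0 - momentum) * mean
    new_var = momentum * running_var + (1.0 - momentum) * var
    x_hat = (x - mean) / np.sqrt(var + eps)
    activated = np.where(x_hat > 0, x_hat, alpha * x_hat)
    return activated, new_mean, new_var


batch = np.array([[1.0], [3.0]])
out, run_mean, run_var = batch_norm_leaky_relu(batch, np.zeros(1), np.ones(1))
```

For this two-sample batch the normalized values are close to -1 and 1, so the negative one is scaled by alpha to about -0.1.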

Of format 000000.png

Training set size: ~7500 images

Testing set size: ~7500 images

Various dimensions from [1224, 370] to [1242, 375]

Images

- Resize all images to a uniform [1242, 375] with cubic interpolation

- Random crop to [375, 375]

- Normalize to range [0., 1.]

- Pad size to [448, 448]
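The crop, normalize, and pad steps above might look like the following NumPy sketch. The resize step is omitted because it needs an image library. The padding position (zeros on the bottom and right) and the function name are assumptions, since the README does not say where the padding goes.

```python
import numpy as np


def preprocess_image(img, crop=375, out_size=448, rng=None):
    """Random-crop, scale to [0, 1], and zero-pad an already resized image.

    img: uint8 array of shape [375, 1242, 3] (height, width, channels).
    Returns the padded image and the (x0, y0) crop offset, which is needed
    later to shift the labels.
    """
    rng = np.random.default_rng() if rng is None else rng
    height, width = img.shape[:2]
    x0 = int(rng.integers(0, width - crop + 1))
    y0 = int(rng.integers(0, height - crop + 1))
    patch = img[y0:y0 + crop, x0:x0 + crop].astype(np.float32) / 255.0
    pad = out_size - crop
    # Assumption: pad with zeros on the bottom and right edges only.
    padded = np.pad(patch, ((0, pad), (0, pad), (0, 0)), mode="constant")
    return padded, (x0, y0)


dummy = np.full((375, 1242, 3), 255, dtype=np.uint8)
image, offset = preprocess_image(dummy, rng=np.random.default_rng(0))
```

Returning the crop offset keeps the image and label pipelines in step, because labels outside the crop range are discarded later.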

Labels

- Discard all labels that are not Car / Pedestrian / Cyclist / Misc. Vehicle (Truck or Van)

- Discard all labels outside crop range

- One-hot encode labels

- Convert p1p2 to xywh

- Assign boxes to cells

- Normalize w,h to cell dimensions

- Normalize x,y to image dimensions

- Append obj, no_obj, objI boolean masks
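Two of the label steps above, converting p1p2 to xywh and assigning boxes to cells, can be sketched as below. This assumes that x, y denote the box centre and that the grid is laid over the padded 448x448 image; both are assumptions, and the helper names are illustrative.

```python
def corners_to_xywh(x_min, y_min, x_max, y_max):
    """Convert corner form [p1x, p1y, p2x, p2y] to [x, y, w, h] (x, y = centre)."""
    w = x_max - x_min
    h = y_max - y_min
    return x_min + w / 2.0, y_min + h / 2.0, w, h


def cell_index(x, y, image_size=448, sx=7, sy=7):
    """Return the (column, row) of the grid cell containing the point (x, y)."""
    col = min(int(x / image_size * sx), sx - 1)
    row = min(int(y / image_size * sy), sy - 1)
    return col, row


box = corners_to_xywh(100.0, 50.0, 200.0, 150.0)
cell = cell_index(box[0], box[1])
```

The `min(...)` clamp keeps a point lying exactly on the right or bottom image edge inside the last cell.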

All annotations are white-space delimited

Object Annotations

Of format 000000.txt

15 Columns of separated data

-1 is default value if field does not apply

- Class - Car, Van, Truck, Pedestrian, Person Sitting, Cyclist, Tram, Misc., Don't Care

- Truncated - If the bounding box is truncated (leaves the screen)

- Occluded - If the bounding box/class is partially obscured

- Observation angle - Range from -pi to pi

- Bounding box x_min

- Bounding box y_min

- Bounding box x_max

- Bounding box y_max

- 3D - x dimension of object

- 3D - y dimension of object

- 3D - z dimension of object

- x location

- y location

- z location

- ry rotation around y-axis

For simplicity's sake, only columns 1, 5, 6, 7, and 8 will be used.
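Reading one annotation line and keeping only those columns could be sketched like this. The class mapping follows the label rules listed earlier (Truck and Van become Misc. Vehicle); the function name and the sample line are made up for illustration.

```python
# Classes that are kept, mapped to the four classes used for training.
KEPT_CLASSES = {
    "Car": "Car",
    "Pedestrian": "Pedestrian",
    "Cyclist": "Cyclist",
    "Truck": "Misc. Vehicle",
    "Van": "Misc. Vehicle",
}


def parse_kitti_label(line):
    """Return (class, (x_min, y_min, x_max, y_max)), or None if the class is discarded."""
    fields = line.split()  # annotations are white-space delimited
    if len(fields) != 15:
        raise ValueError("expected 15 columns, got %d" % len(fields))
    label = KEPT_CLASSES.get(fields[0])
    if label is None:
        return None
    # Columns 5-8 (1-indexed) hold the 2D bounding box corners.
    x_min, y_min, x_max, y_max = (float(v) for v in fields[4:8])
    return label, (x_min, y_min, x_max, y_max)


# Illustrative line with invented values, not taken from the dataset.
sample = "Van 0.00 0 -1.57 100.0 120.0 300.0 250.0 2.0 1.8 4.5 1.0 1.6 10.0 -1.5"
parsed = parse_kitti_label(sample)
```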

| Qty | Item | Total Weight (g) | Price |

|---|---|---|---|

| 1x | 3D Printed Frame | 50.00g | N/A |

| 1x | LiPo Battery | 165.00g | $19.10 |

| 4x | Electronic Speed Controller | 28.00g | $50.68 |

| 1x | Power Distribution Board | 19.30g | $4.13 |

| 4x | 100mm Carbon Fiber Tubes | 45.20g | $7.99 |

| 1x | PXFmini Power Module | 50.00g | $44.94 |

| 1x | PXFmini | 15.00g | $103.36 |

| 4x | Motors | 120.00g | $39.96 |

| 4x | Propellers | 21.2g | $8.76 |

| 1x | Pi Zero | 9.00g | $5.00 |

| 1x | Pi Camera | 18.14g | $14.99 |

| N/A | Misc. Wires | 20.0g | N/A |

| Totals | N/A | 560.84g | $298.91 |

Battery: 1300mAh at 45C. Max recommended current draw = Capacity (Ah) * C Rating = 1.3*45 = 58.5A

Thrust Required: Because the drone is not intended for racing, a thrust-to-weight ratio of 4:1 works well. A total thrust of about 2.243kg (4 * 560.84g) is required, meaning about 560g of thrust per motor.

Current: Looking at the thrust table below, we can see that 13A @ 16.8V on an RS2205-2300KV with GF5045BN propellers nets us almost exactly 560g of thrust. Multiplying the current by the four motors, we end up with a maximum total current of 52A, below the 58.5A theoretical maximum of the LiPo battery used.

Flight Time: We can find the current at which all motors together provide enough thrust to keep the drone in the air (560g total, or 140g each). Looking at the thrust table, we can see that a little less than 3A per motor nets us around 140g of thrust. Assuming only 80% of capacity is usable, we have 1.04Ah available. The four motors together draw about 4 * 3A = 12A at hover, so dividing 1.04Ah by 12A and multiplying by 60min/h gives a theoretical flight time of about 5.2 minutes.

| Current (A) | Thrust (g) | Efficiency (g/W) | Speed (RPM) |

|---|---|---|---|

| 1 | 76 | 4.75 | 7220 |

| 3 | 183 | 3.81 | 10790 |

| 5 | 282 | 3.54 | 13030 |

| 7 | 352 | 3.10 | 14720 |

| 9 | 426 | 2.93 | 16180 |

| 11 | 497 | 2.82 | 17150 |

| 13 | 560 | 2.69 | 18460 |

| 15 | 628 | 2.62 | 19270 |

| ... | ... | ... | ... |

| 27 | 997 | 2.28 | 23920 |

| 30 | 1024 | 2.14 | 24560 |
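The battery and hover estimates can be reproduced from the table with a short script. This is a rough sketch: it interpolates linearly between table rows, counts the current of all four motors, and uses made-up names throughout. It gives a slightly longer hover time than rounding the per-motor current up to 3A.

```python
import numpy as np

# Per-motor bench data copied from the first rows of the thrust table above.
CURRENT_A = np.array([1, 3, 5, 7, 9, 11, 13, 15], dtype=float)
THRUST_G = np.array([76, 183, 282, 352, 426, 497, 560, 628], dtype=float)


def hover_budget(mass_g=560.84, capacity_ah=1.3, c_rating=45.0,
                 usable_fraction=0.8, motors=4):
    """Estimate maximum battery current, hover current, and hover time."""
    max_current_a = capacity_ah * c_rating
    thrust_per_motor_g = mass_g / motors
    # Linear interpolation of current as a function of thrust.
    hover_current_per_motor_a = float(
        np.interp(thrust_per_motor_g, THRUST_G, CURRENT_A)
    )
    total_hover_current_a = motors * hover_current_per_motor_a
    hover_minutes = usable_fraction * capacity_ah / total_hover_current_a * 60.0
    return {
        "max_current_a": max_current_a,
        "hover_current_per_motor_a": hover_current_per_motor_a,
        "hover_minutes": hover_minutes,
    }


budget = hover_budget()
```

With these numbers the interpolated hover current is roughly 2.2A per motor, which corresponds to about 7 minutes of hover on 80% of the pack.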

- 4x 3pin motor to ESC female to female connector

- 4x M3 Locknuts

- 2x M3x22mm spacers

- 4x M3x32mm

- 4x M3 Washers

- 32x M2x10mm

- 8x M3x10mm

- 8x M3x6mm

- 12x M3x8mm

- 1x Power Distribution Board - HOBBY KING LITE

- 4x ESC - Favourite Little Bee 20A 2-4S

- 1x Erle Robotics PXFmini

- 1x Erle Robotics PXFmini Power Module

- 1x Turnigy Graphene 1300mAh 4S 45C LiPo Pack w/ XT60

- 4x EMAX RS2205 Brushless Motor

- 4x GEMFAN 5045 GRP 3-BLADE Propellers

- 4x 100mm Carbon Fiber Tube w/ Diameter of 12mm

- 1x Pi Camera at 5MP

Using a modified version of the 3D printed Firefly drone (http://firefly1504.com)

Files located in Firefly Drone Parts\MainFrame

Print Bumper_v2_f.stl and 2x side.stl with 50% infill

Print Lower_plate_V2.stl, Top_plate_front_3mm.stl, and Top_plate_rear_3mm.stl with 25% infill

Files located in Firefly Drone Parts\Arms

Print 8x cliplock and rest of parts with 25% infill

Files located in Firefly Drone Parts\Drill Jig

Print all parts with 25% infill

Using the jigs, cut the 500mm tube into 4x 100mm tubes. Drill 3mm and 4mm holes into the tubes through the jigs.

Nov. 1st Update 2

Progress Update!

Nov. 1st Update 1

Resoldered a misconnection between the PXFMini and Pi0, and soldered the wires between the motors and ESCs

Oct. 29th Update 1

Mounted battery, Pi0, PXFMini, and Pi Camera.

Oct. 24th Update 3

Progress update!

Oct. 24th Update 2

Attached the motors to the arms using the motor mounts, with 2x M3x10mm and 4x M2x8mm screws

Oct. 24th Update 1

New parts arrived! Need to reorder propellers (2 are clockwise orientation) and a LiPo battery charger.

Oct. 19th Update 2

Began construction of actual drone! Still waiting for new motors to begin arm assembly but main frame is done!

Oct. 19th Update 1

PXFMini and power module arrived! I also went out and got various screws, nuts, and washers for the assembly.

Oct. 18th Update 1

Finished printing the remaining pieces! There were some issues cleaning support material off the inner clamps, but it was sanded off. The carbon fiber tube was cut successfully with the drill jig!

Oct. 16th Update 3

Motors, Propellers, Carbon Fiber Tubes, Dampener Balls, and Camera arrived!

Oct. 16th Update 2

All Mainframe Materials printed successfully!

Oct. 16th Update 1

Printed Bumper_v2.stl and side.stl successfully! Lower_plate_V2 printed with wrong orientation and extra support material. Requeued.

http://docs.erlerobotics.com/brains/pxfmini/software/apm

Ardupilot (APM)

http://ksimek.github.io/2013/08/13/intrinsic/

Explanation of camera matrices

https://homes.cs.washington.edu/~seitz/papers/cvpr97.pdf

Paper detailing a proposal for coloured scene reconstruction by calibrated voxel colouring

https://people.csail.mit.edu/sparis/talks/Paris_06_3D_Reconstruction.pdf

Presentation slide deck by Sylvain Paris on different methods for 3D reconstruction from multiple images

https://web.stanford.edu/class/cs231a/course_notes/03-epipolar-geometry.pdf

Stanford CS231A lecture notes on epipolar geometry and 3D reconstruction

http://pages.cs.wisc.edu/~chaol/cs766/

Assignment page from the University of Wisconsin for uncalibrated stereo vision using epipolar geometry

http://journals.tubitak.gov.tr/elektrik/issues/elk-18-26-2/elk-26-2-11-1704-144.pdf

Paper detailing a proposed 3D reconstruction pipeline given camera calibration matrices

OpenCV Camera Calibration

https://docs.opencv.org/2.4/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html

Camera Calibration and 3D Reconstruction