Project | Paper | Usage | Citation

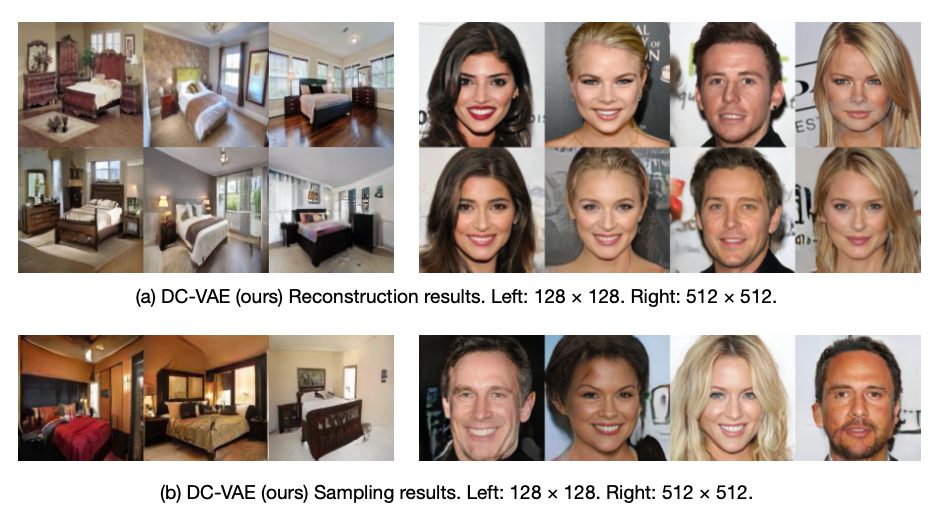

DC-VAE is a generative autoencoder trained with dual contradistinctive losses, designed to improve generative autoencoders that perform simultaneous inference (reconstruction) and synthesis (sampling). The model, named dual contradistinctive generative autoencoder (DC-VAE), integrates an instance-level discriminative loss (maintaining instance-level fidelity for the reconstruction/synthesis) with a set-level adversarial loss (encouraging set-level fidelity for the reconstruction/synthesis), both being contradistinctive.
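To make the two loss terms concrete, here is a minimal NumPy sketch. It is not taken from the repository's training code. It assumes the instance-level term is an InfoNCE-style contrastive loss that matches each reconstruction to its own input, and that the set-level term is a standard non-saturating GAN generator loss. The function names, the `temperature` value, and the toy feature shapes are all hypothetical; the actual formulation in `train.py` may differ.

```python
import numpy as np


def info_nce(feat_x, feat_rec, temperature=0.1):
    """Instance-level contrastive loss (assumed InfoNCE form).

    Row i of feat_rec is the positive for row i of feat_x;
    every other row in the batch acts as a negative.
    """
    # L2-normalize so dot products are cosine similarities.
    a = feat_x / np.linalg.norm(feat_x, axis=1, keepdims=True)
    b = feat_rec / np.linalg.norm(feat_rec, axis=1, keepdims=True)
    logits = a @ b.T / temperature  # shape (N, N)
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (matching pair) as the target.
    return -np.mean(np.diag(log_prob))


def generator_adv_loss(d_logits_fake):
    """Set-level adversarial loss for the generator (non-saturating GAN).

    Equals mean(-log(sigmoid(D(fake)))), written as softplus(-logit).
    """
    return np.mean(np.logaddexp(0.0, -d_logits_fake))


# Toy features standing in for critic features of inputs and reconstructions.
rng = np.random.default_rng(0)
feats = rng.normal(size=(8, 16))
rec_feats = feats + 0.01 * rng.normal(size=(8, 16))

instance_loss = info_nce(feats, rec_feats)
set_loss = generator_adv_loss(rng.normal(size=8))
total_loss = instance_loss + set_loss  # equal weighting is an assumption
```

The contrastive term is small only when each reconstruction is closer to its own input than to any other sample in the batch. The adversarial term, by contrast, looks only at whether the generated set as a whole is scored as real.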

- Clone the repository

      git clone https://github.com/mlpc-ucsd/DC-VAE
      cd DC-VAE

- Set up the conda environment

      conda env create -f dcvae_env.yml
      conda activate dcvae_env

- Train the network on CIFAR-10

      python train.py

If you find this project useful for your research, please cite the following work.

    @InProceedings{Parmar_2021_CVPR,
        author    = {Parmar, Gaurav and Li, Dacheng and Lee, Kwonjoon and Tu, Zhuowen},
        title     = {Dual Contradistinctive Generative Autoencoder},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2021},
        pages     = {823-832}
    }

We found the following libraries helpful in our research.

- FID - computing the FID score.

- IS - computing the Inception Score.

- AutoGAN - model architecture for the low resolution experiments.

- ProGAN - model architecture for the high resolution experiments.
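For readers unfamiliar with the FID metric mentioned above, the sketch below shows the closed-form Fréchet distance between two Gaussians fitted to feature sets. It is an illustration only, not the FID library's code. In practice the features come from a pretrained Inception network, which is omitted here. The function name `frechet_distance` and the toy inputs are hypothetical.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets.

    d^2 = ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 * (S_a S_b)^(1/2))
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    sigma_a = np.cov(feats_a, rowvar=False)
    sigma_b = np.cov(feats_b, rowvar=False)
    # Tr((S_a S_b)^(1/2)) equals the sum of square roots of the eigenvalues
    # of S_a S_b, which are real and non-negative for PSD covariances.
    eigvals = np.linalg.eigvals(sigma_a @ sigma_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return diff @ diff + np.trace(sigma_a) + np.trace(sigma_b) - 2.0 * trace_sqrt


# Toy usage: random vectors stand in for Inception features.
rng = np.random.default_rng(0)
real_feats = rng.normal(size=(200, 4))
fake_feats = real_feats + np.array([1.0, 2.0, 0.0, 0.0])  # same covariance, shifted mean
score = frechet_distance(real_feats, fake_feats)
```

Lower values mean the two feature distributions are closer. When the covariances match, as in the toy example, the distance reduces to the squared difference of the means.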

This work is funded by NSF IIS-1717431 and NSF IIS-1618477. We thank Qualcomm Inc. for award support. The work was performed when G. Parmar and D. Li were with UC San Diego.