- Install dependencies (below)

- Clone repository

- Make sure you have your Twitter API keys handy if you are gathering any Twitter data

- Each tool and its usage is described in the README file inside its tool category folder.

All the libraries used in this toolkit can be installed using the following command.

sh requirements.sh

Note: If you would like to set up headless browsing automation tasks, please also install the additional scraping dependencies listed further below.

- Python 3+

- spaCy

  pip install spacy

  python -m spacy download en

  python -m spacy download en_core_web_sm

- Twarc

  pip install twarc

- Tweepy v3.8.0

  pip install tweepy

- argparse - v3.2

  pip install argparse

- xtract - v0.1a3

  pip install xtract
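After running `requirements.sh` (or the individual `pip` commands above), a short script like the following can confirm which of the Python packages are actually present. This is a minimal sketch and not part of SMMT. It assumes Python 3.8+ (for `importlib.metadata`) and that the distribution names match the `pip install` commands listed above. Because `argparse` ships with the Python 3 standard library, it may be reported as not installed even though `import argparse` works.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the pip commands in the dependency list.
# Note: argparse is part of the Python 3 standard library, so it may have
# no installed distribution metadata unless it was pip-installed.
PACKAGES = ["spacy", "twarc", "tweepy", "argparse", "xtract"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name:10} {ver or 'NOT INSTALLED'}")
```

Any package printed as `NOT INSTALLED` (other than possibly `argparse`) can be installed with the matching `pip install` command above.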

NOTE: If you are using the scraping utility, install the following dependencies. They are needed for the headless browser automation tasks, which run without a display. Configuring them can be finicky, but there is plenty of documentation online.

- Xvfb

  sudo yum install Xvfb

- Firefox

  sudo yum install firefox

- selenium

  pip install -U selenium

- pyvirtualdisplay - v0.25

  pip install pyvirtualdisplay

- GeckoDriver - v0.26.0

  First install jq:

  sudo yum install jq

  then run the provided installation script:

  bash SMMT/data_acquisition/geckoDriverInstall.sh

If you still have issues, or if the Firefox window pops up through X11, follow this guide: https://www.tienle.com/2016/09-20/run-selenium-firefox-browser-centos.html

Obtaining Twitter API keys is a very important step: without them, none of the tools that use the Twitter API will work.
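One way to keep the keys handy without hard-coding them in scripts is to export them as environment variables and check for them before running any Twitter tool. The sketch below assumes the four OAuth 1.0a credentials used by the Twitter v1.1 API (consumer key, consumer secret, access token, access token secret) and uses hypothetical variable names. SMMT's own scripts may expect credentials in a different form, so check each tool's README.

```python
import os

# Hypothetical environment variable names, chosen for illustration only;
# SMMT's scripts may read credentials from somewhere else.
REQUIRED_KEYS = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_twitter_credentials(env=None):
    """Return the four credentials as a dict, or raise if any is missing or empty."""
    env = os.environ if env is None else env
    # An empty string counts as missing, just like an unset variable.
    missing = [name for name in REQUIRED_KEYS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing Twitter API credentials: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_KEYS}


if __name__ == "__main__":
    try:
        load_twitter_credentials()
        print("All Twitter API credentials found.")
    except RuntimeError as err:
        print(err)
```

Failing early with a list of the missing names is usually easier to debug than an authentication error from the API itself.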

If you used SMMT and liked it, please cite the following paper:

R Tekumalla and JM Banda. "Social Media Mining Toolkit (SMMT)". Genomics & Informatics, 18, (2), 2020. https://doi.org/10.5808/GI.2020.18.2.e16

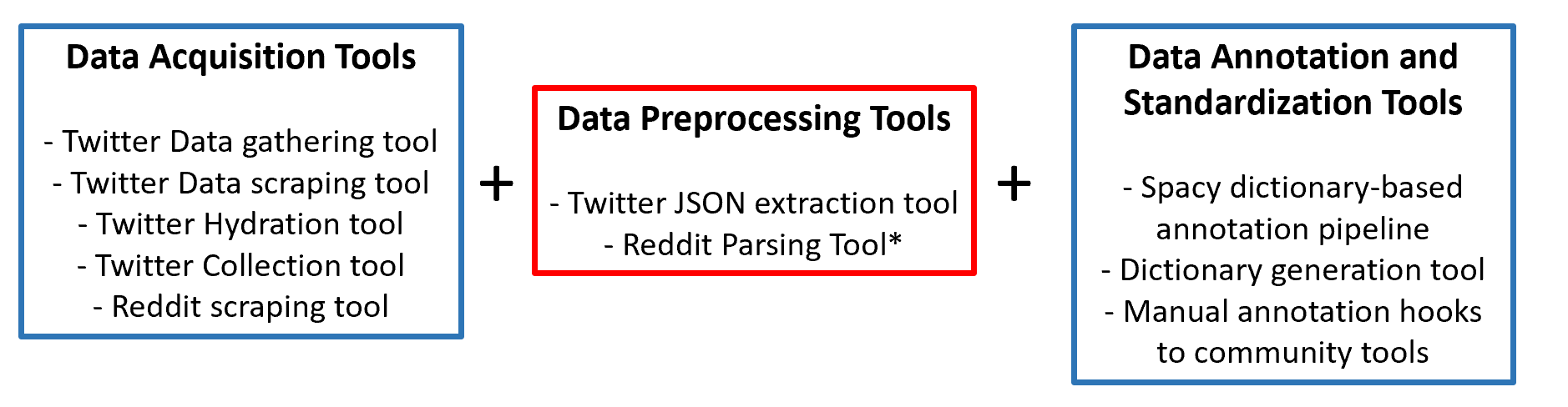

- Twitter hydration tool - This script will hydrate tweet IDs shared by others, retrieving the full tweet objects for each ID.

- Twitter gathering tool - This script will let users specify hashtags and capture new tweets containing those hashtags from the live Twitter stream.

- Twitter JSON extraction tool - This may seem trivial, but most biomedical researchers do not want to work with JSON objects. This tool will take the fields the researcher wants and output an easy-to-use CSV file created from the provided data.

- spaCy dictionary-based annotation pipeline - This is the tool that will require the most work during the hackathon. The pipeline will also be available as a service, with users providing their own dictionaries and feeding in data directly.

- Dictionary generation tool - This tool will transform ontologies or provided dictionary files into spaCy-compliant dictionaries for use with the previous pipeline.

- Manual annotation hooks - Hooks into manual annotation tools such as brat.
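The core idea of the JSON extraction tool can be sketched in a few lines of standard-library Python. This is not SMMT's implementation: it assumes line-oriented JSON input (one tweet object per line, the format tools such as twarc produce) and uses a hypothetical dotted-path syntax (for example `user.screen_name`) to select nested fields. Missing fields become empty cells so every row keeps the same columns.

```python
import csv
import io
import json


def get_field(obj, dotted_path):
    """Follow a dotted path such as 'user.screen_name' into nested dicts."""
    for key in dotted_path.split("."):
        if not isinstance(obj, dict) or key not in obj:
            return ""  # missing fields become empty CSV cells
        obj = obj[key]
    return obj


def tweets_to_csv(json_lines, fields, out):
    """Write a header row, then one CSV row per tweet (one JSON object per line)."""
    writer = csv.writer(out)
    writer.writerow(fields)
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between tweets
        tweet = json.loads(line)
        writer.writerow([get_field(tweet, f) for f in fields])


if __name__ == "__main__":
    sample = [
        '{"id_str": "1", "text": "hello", "user": {"screen_name": "alice"}}',
        '{"id_str": "2", "text": "world", "user": {}}',
    ]
    buf = io.StringIO()
    tweets_to_csv(sample, ["id_str", "user.screen_name", "text"], buf)
    print(buf.getvalue())
```

In practice `json_lines` would be an open file handle (iterating over it yields one line at a time) and `out` a file opened with `newline=""`, as the `csv` module recommends.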

This work was conceptualized for, and mostly carried out during, the Biomedical Linked Annotation Hackathon 6 in Tokyo, Japan.

We are very grateful for the support we received for this work.